Introduction

How can we enable more science fiction to become reality?

Looking to successful outliers from history is a good place to start. After digging into why DARPA works, I asked the follow-up question: how could you follow DARPA's narrow path in a world very different from the one that created it?

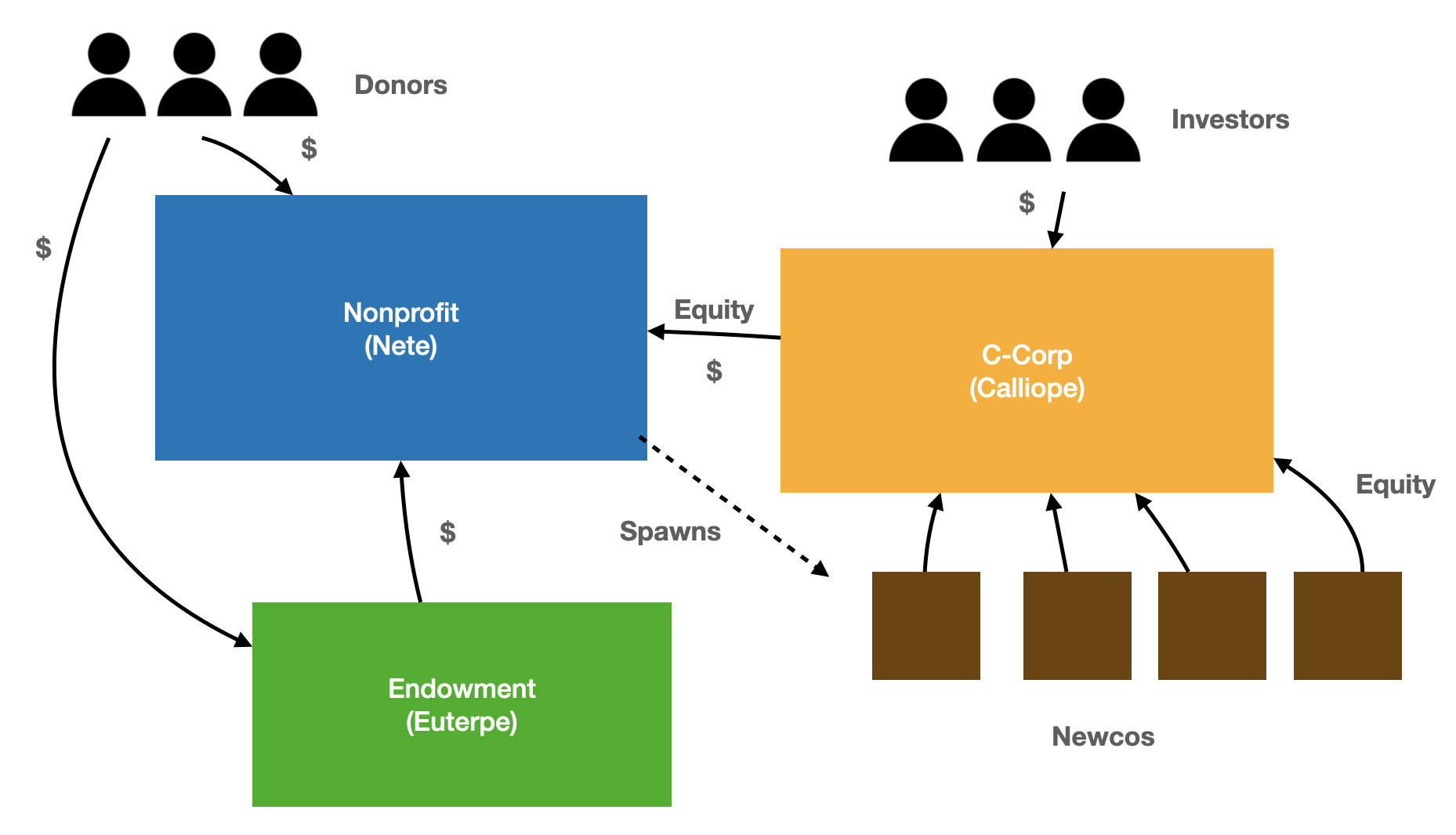

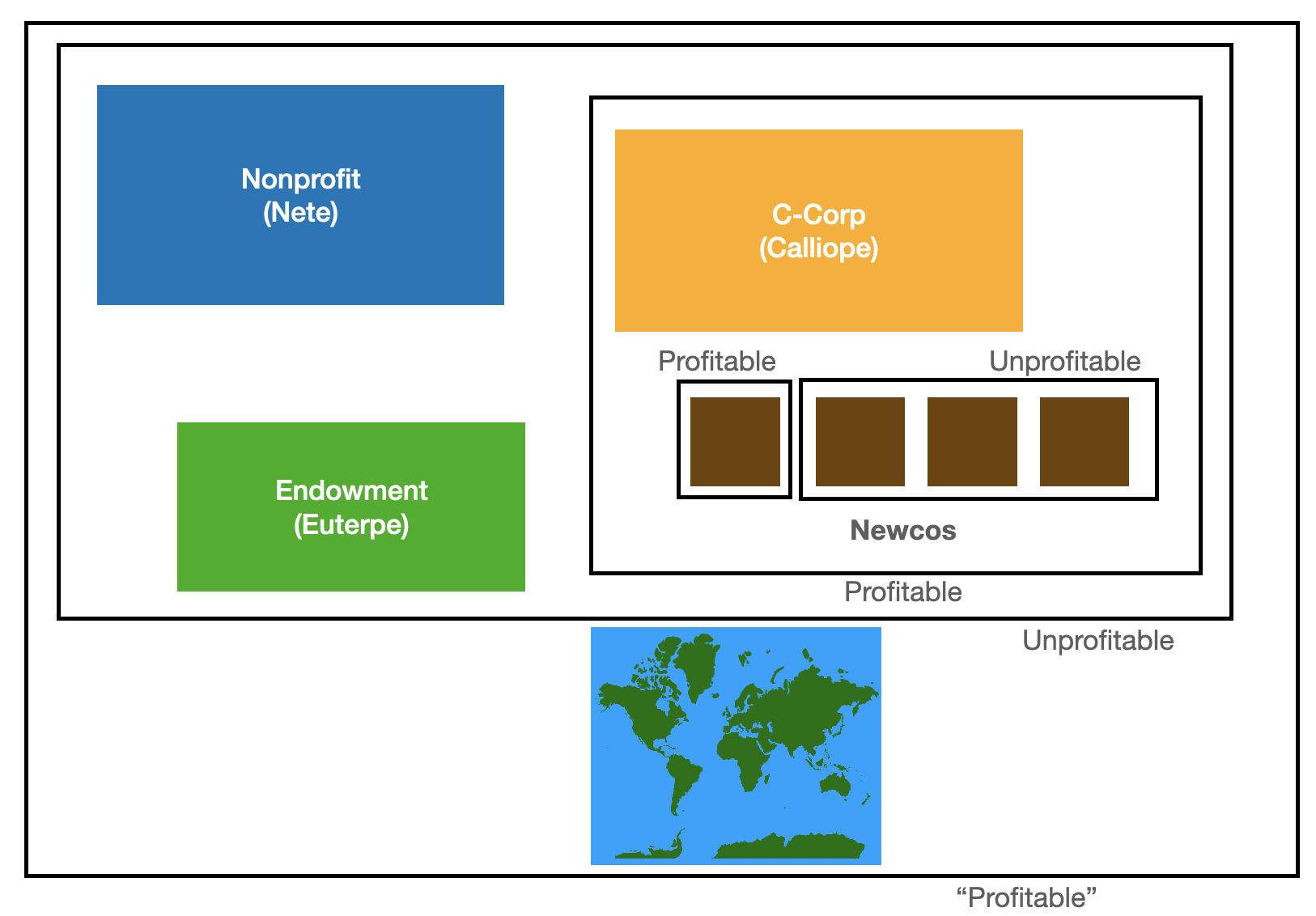

This piece is my answer. It both describes and provides a roadmap for building a hybrid for/nonprofit organization that leverages empowered program managers and externalized research to shepherd technology that is too researchy for a startup and too engineering-heavy for academia. Such an organization takes on work that others can't or won't by precisely mapping out blockers to potentially game-changing technology, creating precise hypotheses about how to mitigate them, and then coordinating programs to execute on those plans.

The proposal doesn't stand on its own — it needs a foundation of evidence and argument. This foundation spans many topics: from the role of value capture in technology creation to the now-defunct historical role of industrial labs, tactics for institutional longevity, and the nitty-gritty of how to fund operations and more.

No single organization can enable science fiction to become reality. Therefore, this document also serves as a user manual for others to build DARPA-riffs and other innovation organizations we cannot yet imagine.

There can be ecosystems that are better at generating progress than others, perhaps by orders of magnitude.

—Tyler Cowen and Patrick Collison, We Need a New Science of Progress

Take Home Messages

- A critical niche in the innovation ecosystem once occupied by industrial labs is unfilled.

- The current innovation ecosystem (academia, startups, and modern corporate R&D) does not cut it.

- A private organization that riffs on DARPA's model could fill this niche.

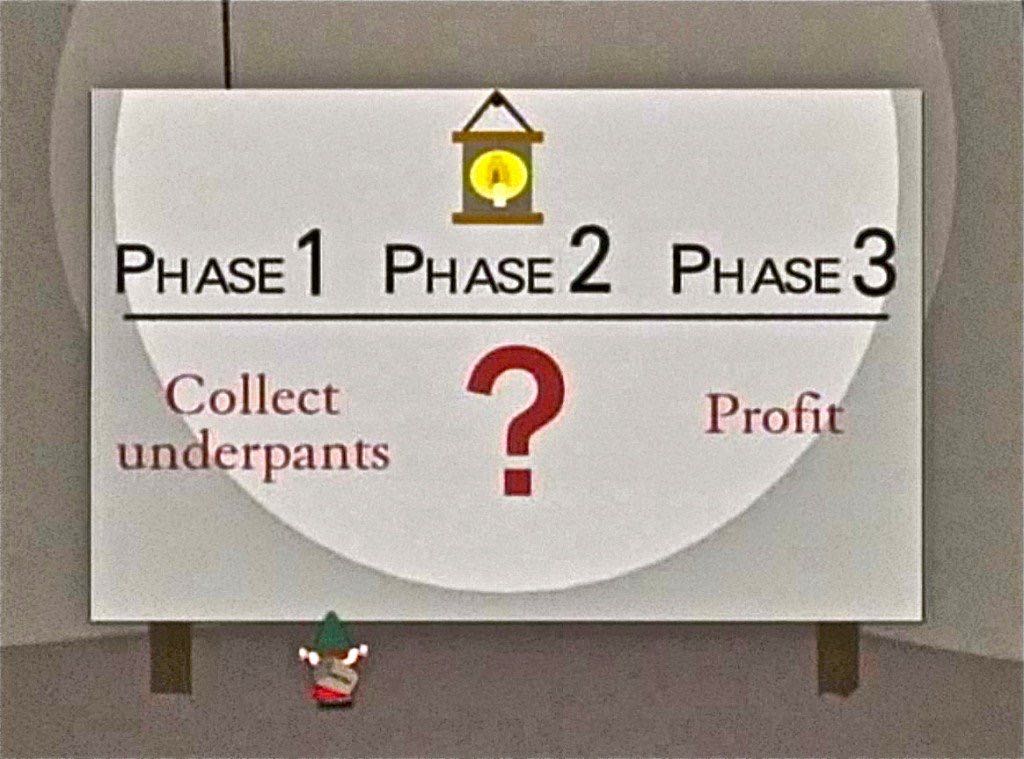

- Private ARPA (PARPA) will de-risk a series of hypotheses and go through three major evolutionary phases before it looks like its namesake.

- There are many tensions and incentive traps along the path to building any innovation organization. Describing them as precisely as possible may enable PARPA and other organizations to sail past them safely.

Master Plan

In short, PARPA's master plan is:

- Create and stress-test unintuitive research programs in a systematic (and therefore repeatable) way.

- Use that credibility to run a handful of research programs and produce results that wouldn't happen otherwise.

- Use that credibility to run more research programs and help them "graduate" to effective next steps.

- Make the entire cycle eventually-autocatalytic by plowing windfalls into an endowment.

Institutional Constraints in the Innovation Ecosystem

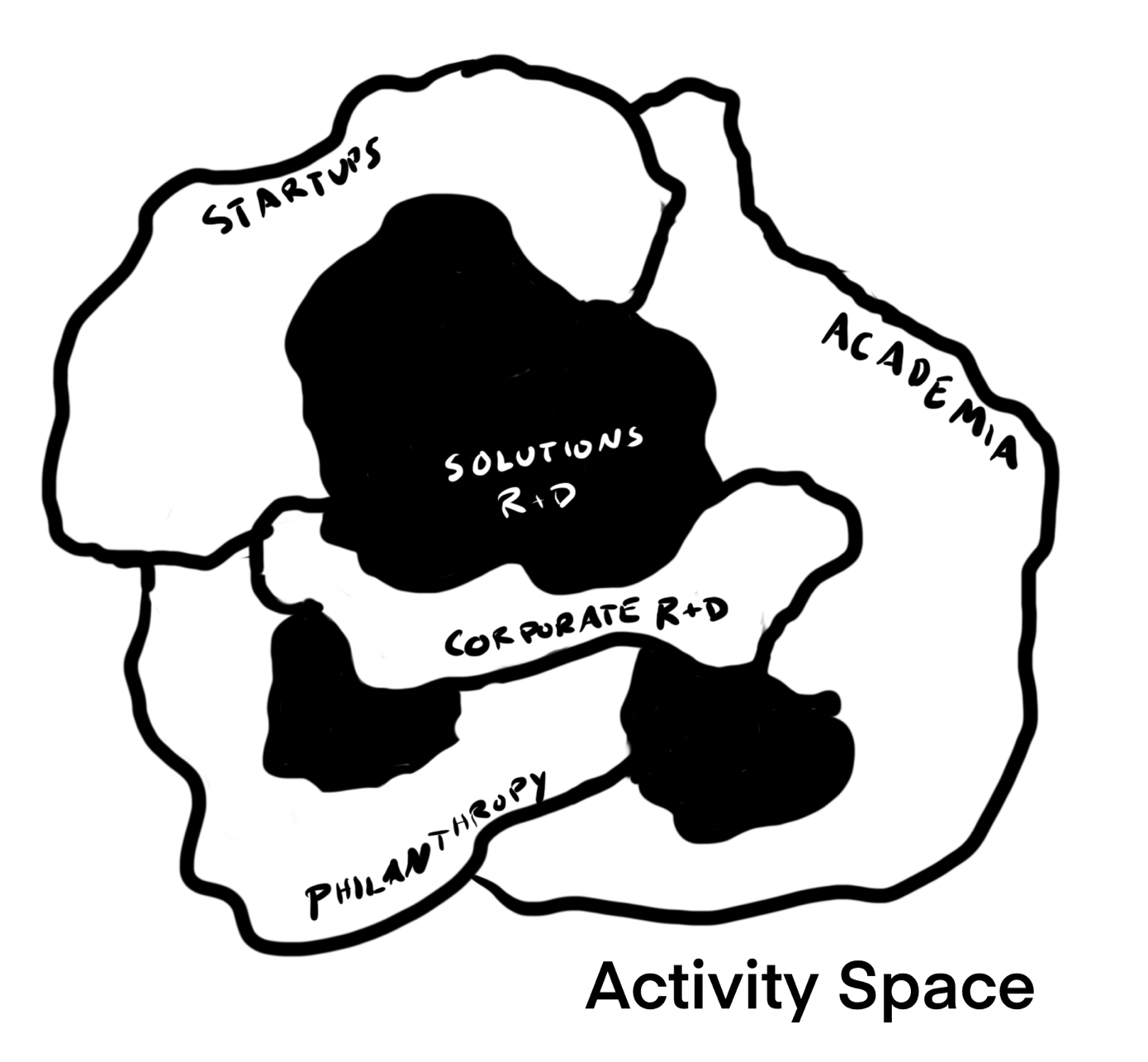

Different institutions enable certain sets of activities that we associate with innovation: Academia is good at generating novel ideas; startups are great at pushing high-potential products into new markets; and corporate R&D improves existing product lines. Together, these institutions comprise an "innovation ecosystem."

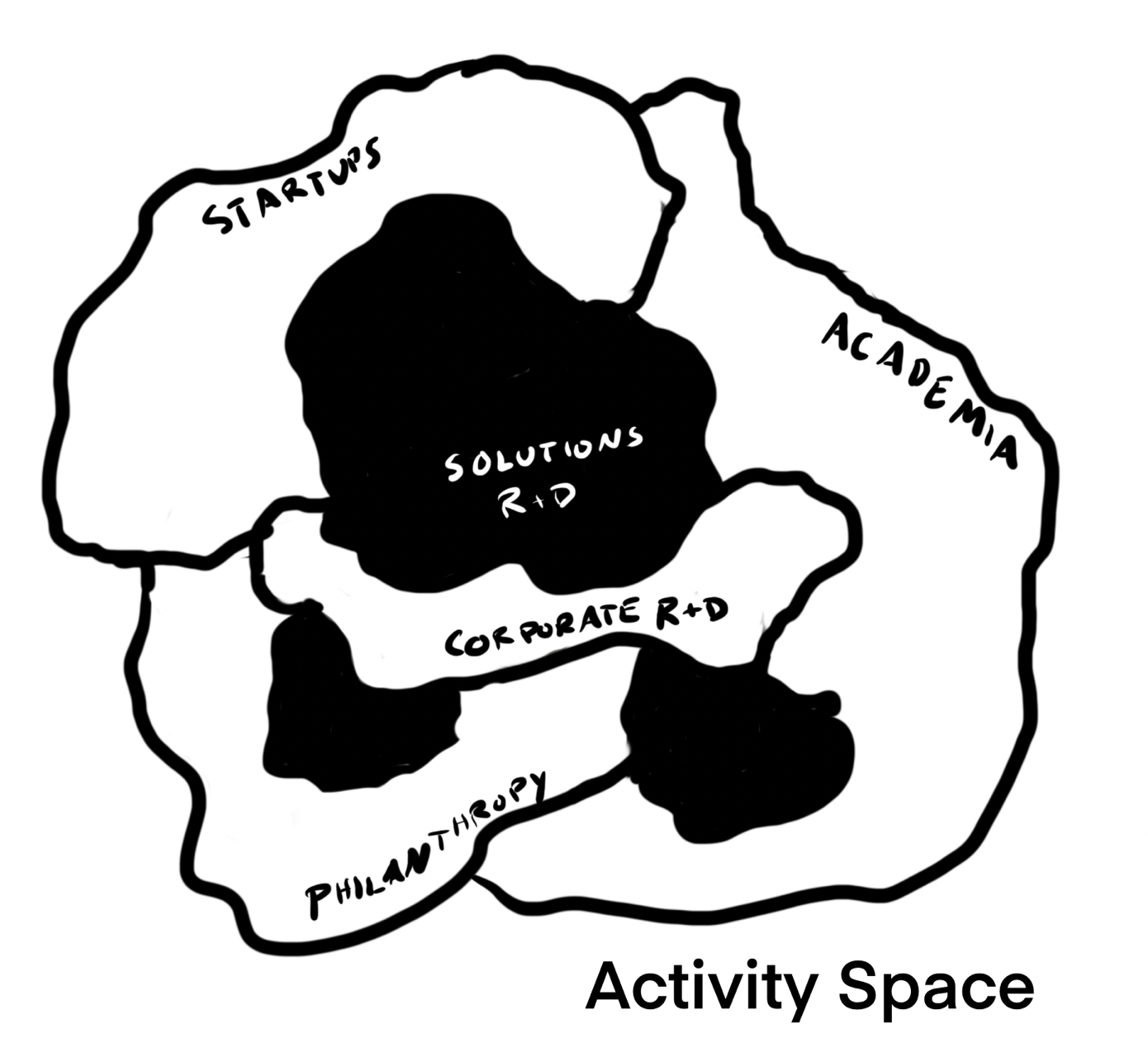

Every institutional structure has constraints that prevent it from engaging in certain activities well or at all. Imagine each institution occupying some area on a map of all possible activities — the institution is well suited to tackle the activities it covers and poorly suited to tackle activities at the edge or outside of its area. Some activities are covered by multiple institutions, but some aren't covered by any institutions. These activities are "constrained out" of happening by existing institutional structures.

The Missing Activities

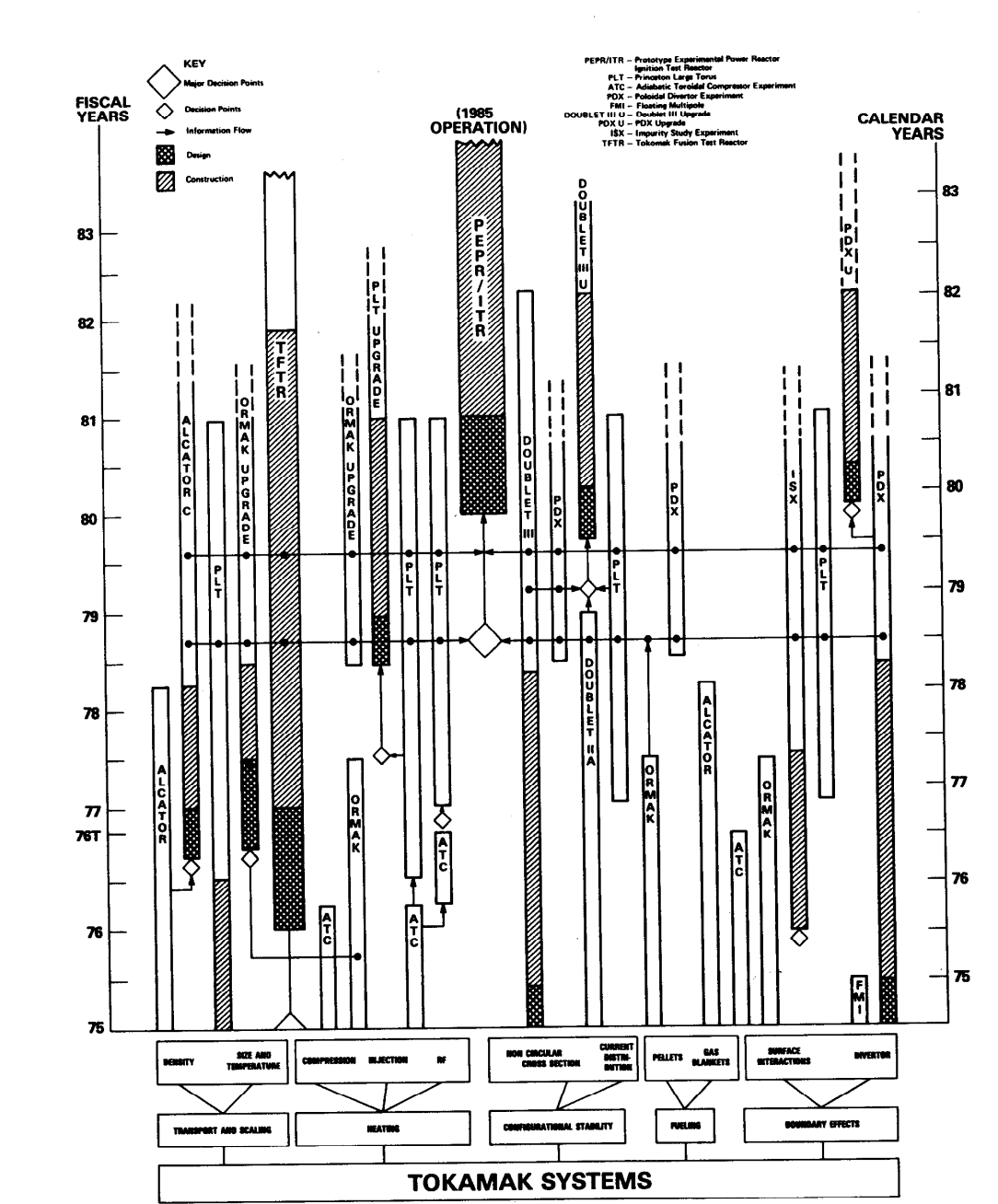

DARPA, alongside golden age industrial labs like Bell Labs, DuPont Experimental Station, GE Laboratories, and others, developed many technologies at the core of the modern world — from transistors and plastics to lasers and antibiotics. These labs all enabled certain activities that are heavily constrained in the modern ecosystem.

What allowed golden-age research orgs to produce such transformative technology?

Most significantly, these organizations simultaneously:

- Promoted work on general purpose technologies before they became specialized.

- Enabled "targeted piddling around," especially with equipment and resources that would not otherwise be available.

- Fostered collaborations among diverse individuals with useful specialized knowledge.

- Shepherded smooth transitions of technologies between different readiness levels, combining manufacturing with research to create novelty and scale.

- Supported projects with extremely long or unknown time frames.

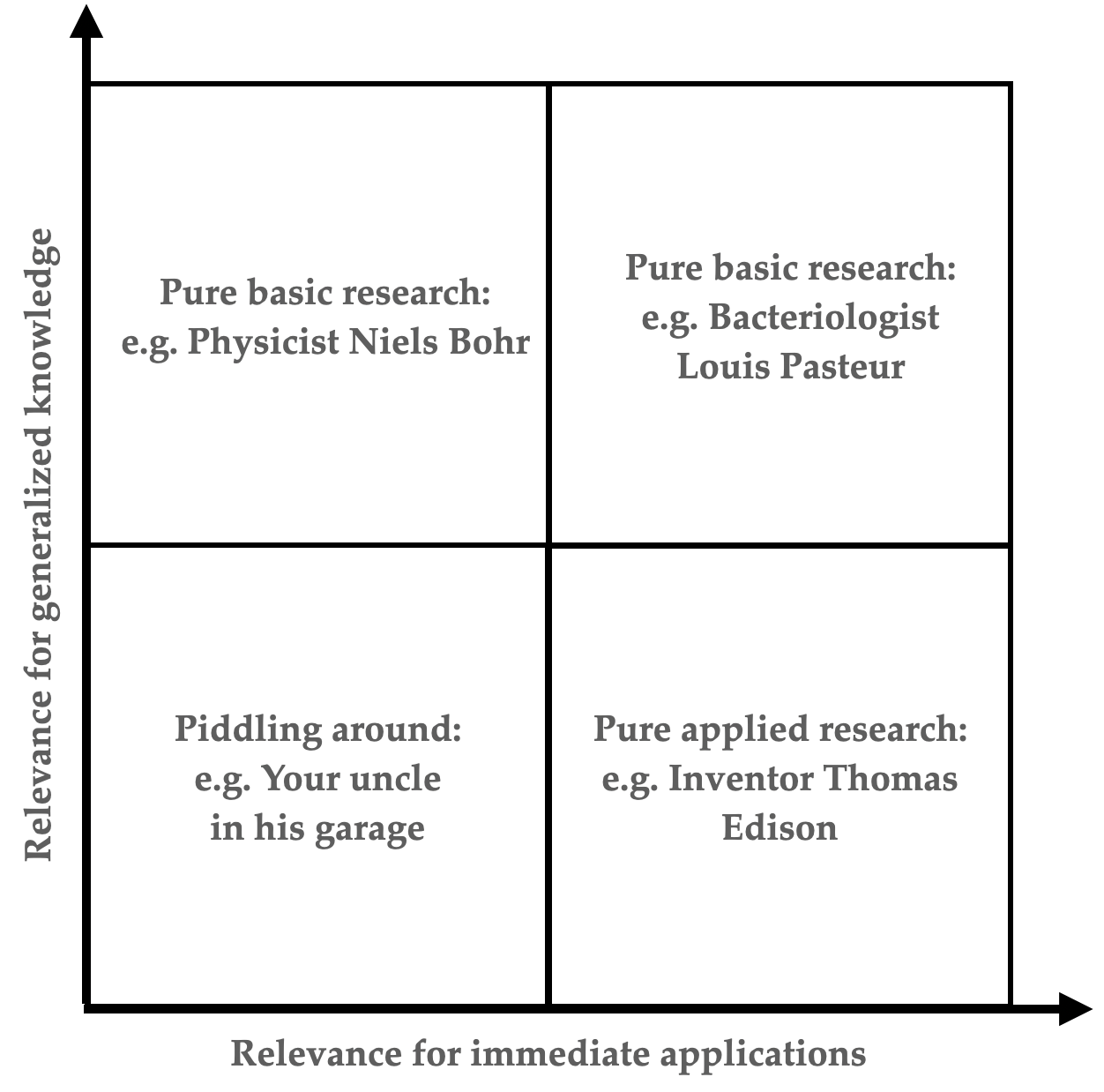

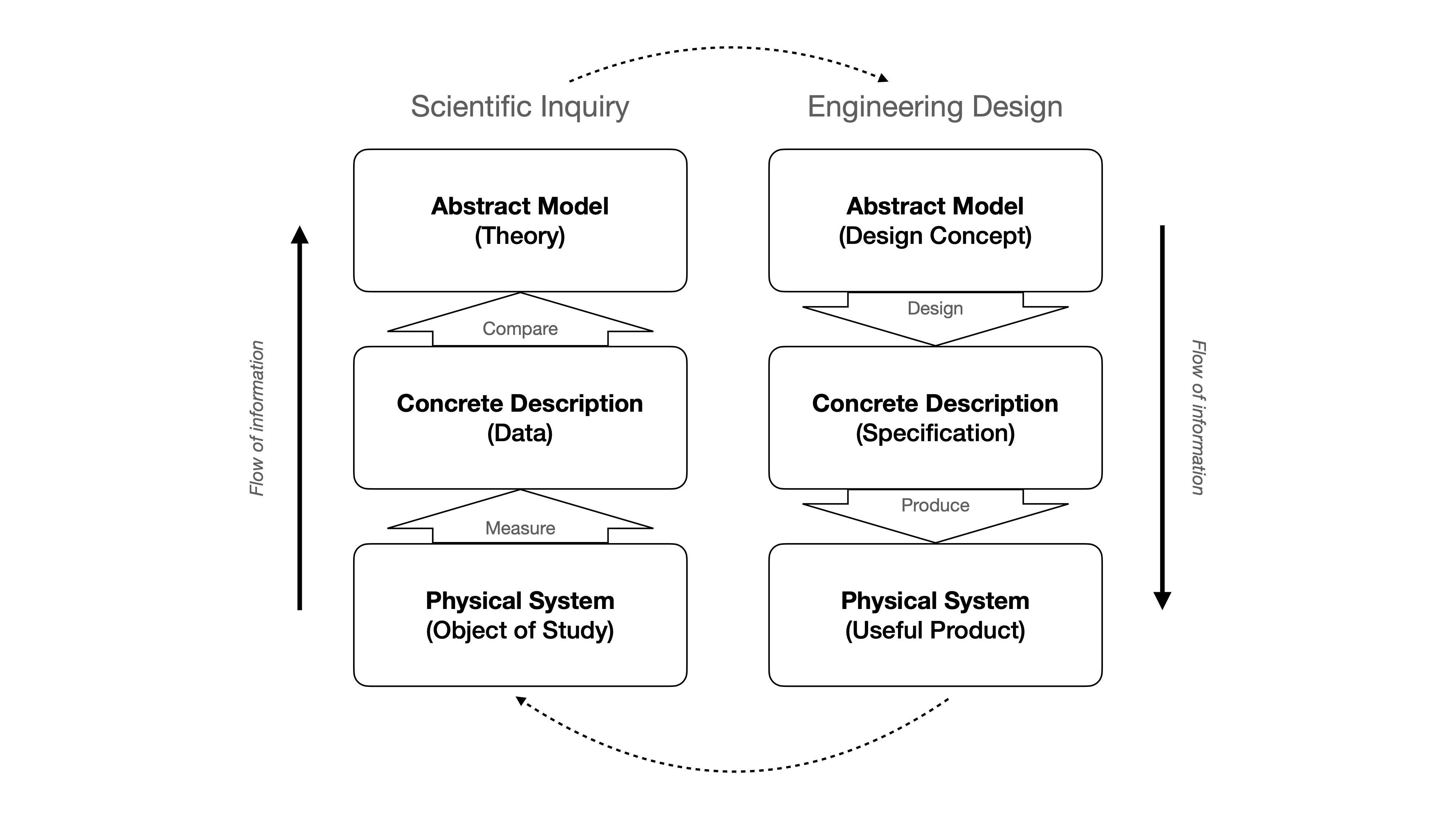

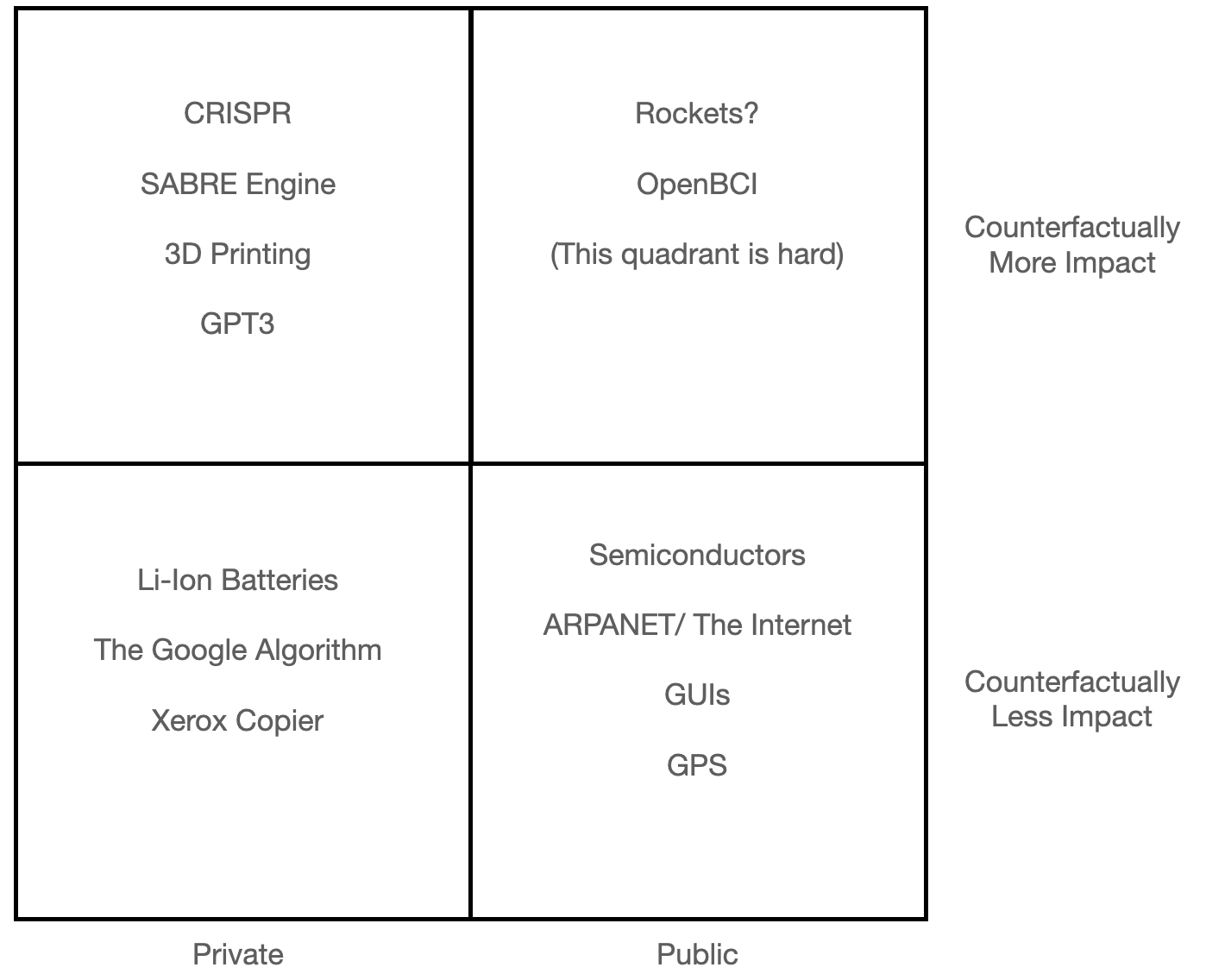

In short, they enabled simultaneous activity in all quadrants described in Donald Stokes's Pasteur's Quadrant.

Why DARPA and not Bell Labs?

Bell Labs may seem a more obvious model to imitate than DARPA, a government military research organization with a historically unique institutional structure. However, a closer look suggests that golden-age industrial labs required certain conditions that cannot be easily replicated today. The decline of corporate R&D was structural — it is the inevitable outcome of a shift in circumstances.

Tensions, Traps, and Other Topics

There are many fundamental tensions and incentive traps surrounding the path to building any innovation organization. Exploring them as explicitly and precisely as possible may enable PARPA and other organizations to navigate past them safely. This piece contains a multitude of hypotheses, theories, and intuition-pumps surrounding the central questions: How does the process of creating new impactful knowledge and technology work? And how can we do more of that better?

Along the way, we will discuss the relationship between innovation organizations and their money factories, the sales channel challenge for frontier technology, how mismatched Buxton Indexes can doom research impact, how to find researchers interested in working outside of existing institutions, speculative tactics for DARPA-riffs and other new innovation organizations, examples of the far-flung technologies that are unlikely to flourish within the status quo, and more.

However, if this document has three central topics, they are:

- How Money Works within an innovation organization dictates the range of incentives, activities, and potential outcomes.

- Legal Structure profoundly impacts the scope of an innovation organization's possibilities.

- Value Capture is the critical tightrope act of every successful innovation organization — research is expensive so insufficient funds equal institutional death, but value capture can kill the full potential of the work.

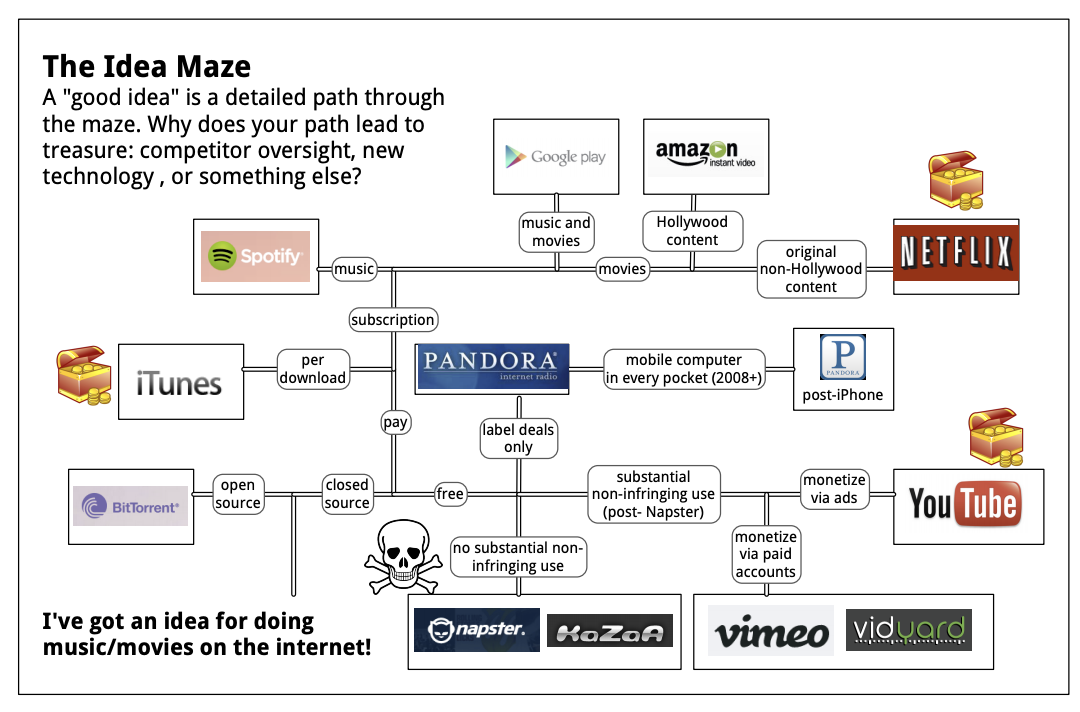

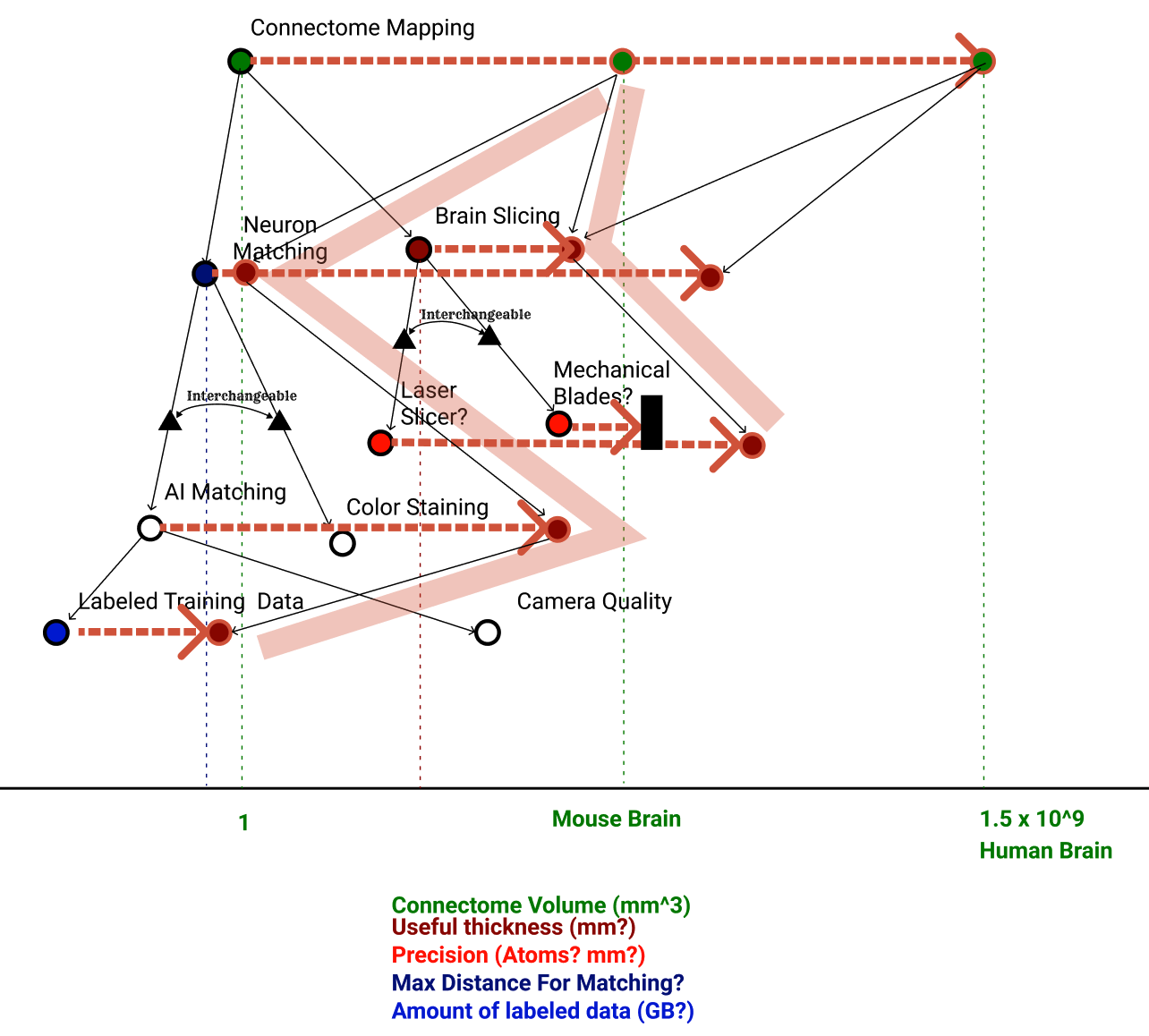

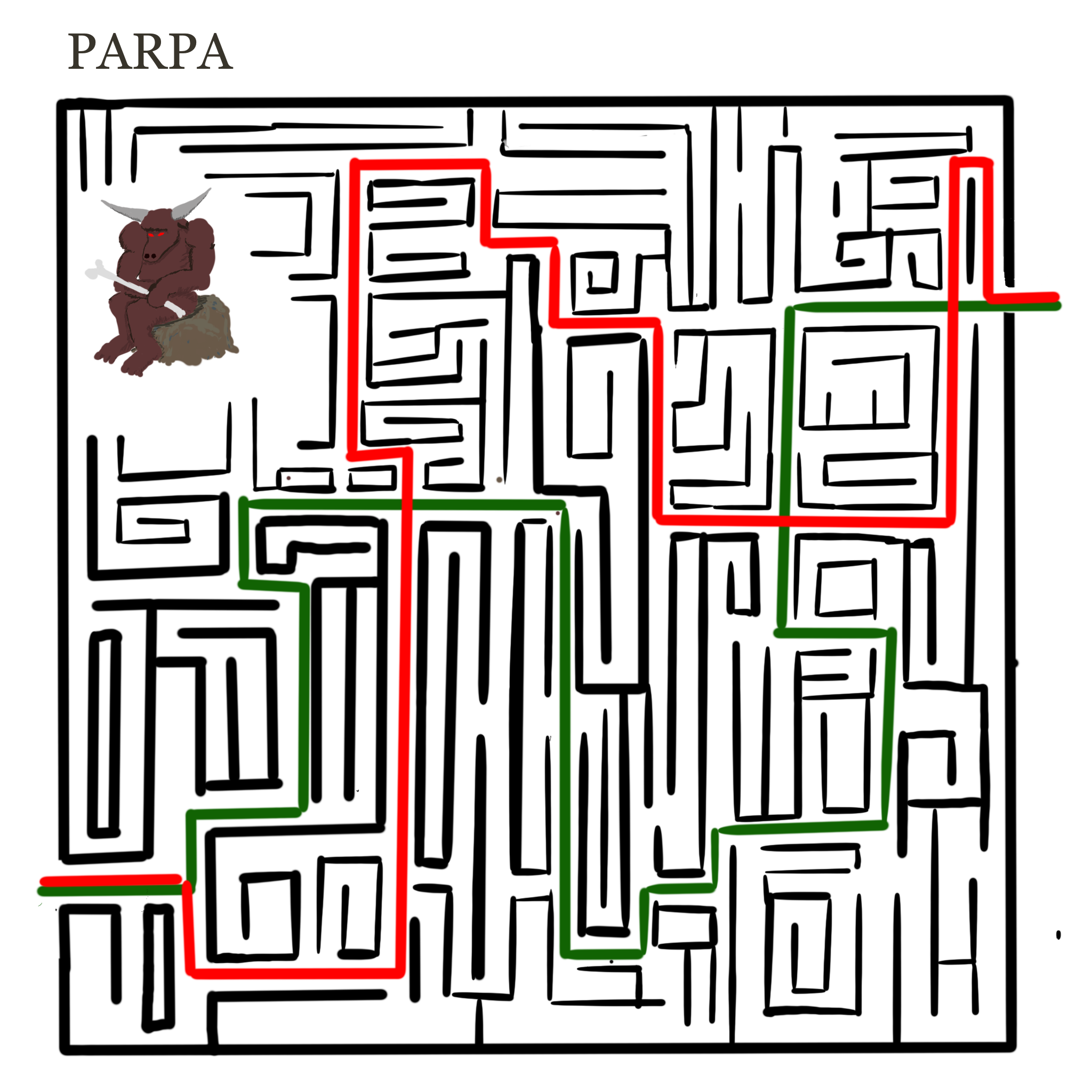

Institutional Design Is Navigating an Idea Maze

Navigating an "idea maze" is a useful analogy for a process that involves a series of hard-to-reverse decisions under uncertainty. This piece describes many potential junctures that someone building a DARPA-riff will probably face, how to think about them, and which fork to take.

Indeed, the entire process of reading the document might resemble a maze. You can follow each intellectual pathway that led to each conclusion. And, as with a maze, I encourage you to chart your own course through the document, jumping to sections that seem most intriguing or controversial!

("Guided tours" are available that highlight material catered to various types of readers. Are you a builder? An observer? A scholar?)

If you prefer to view the project in its most concise format, a two-pager is available here. This has all the nuts and bolts needed to get building. However, if you're curious and want to follow each weave of "Ariadne's thread," please read on.

Who is this piece for?

This piece is intended for five types of readers. Selecting a persona will create a "guided tour" for that persona, highlighting the sections that are hopefully of most interest and value.

- "The builder": You already believe in the importance of creating new innovation organizations and want to take action on that belief.

- "The supporter": You want to support new innovation organizations with your social or financial capital. You might be a philanthropist, a member of a government, or a capitalist (an investor seeking monetary returns or the representative of an organization looking for technological returns).

- "The enthusiast": You're interested in the question: How do we enable more creative science and technology in the world? But perhaps you aren't in a position to be a builder or supporter ... yet.

- "The scholar": You have an academic interest in innovation and how it works. Maybe you're a historian, economist, or policy wonk.

- "The observer": You're a curious person who enjoys learning about the world and encountering new ways of thinking. Perhaps you have a professional connection to the world of research and emerging technology ... or perhaps not.

If none of these categories fit, or the associated guides are insufficient, you can always chart your own adventure. (This will eliminate the "guided tour" highlighting.)

Preliminaries

Purpose

This piece is a combination of three components: a specific organization's design; a broad proposal for a new organizational structure and an associated "research" agenda; and a synthesis of a gestalt of institutions, incentives, history, and theory that stabs at the questions "How does the process of creating new, impactful knowledge and technology work?" and "How can we do more of that better?"

It's traditional to separate the three roles this document is trying to play — analytical synthesis, broad proposal, and specific organizational design. However, in this case, they are too intimately entangled. Separating any one of them would force me to either leave glaring gaps or project an unwarranted level of certainty. The synthesis clearly drives the broad proposal as well as specific organizational hypotheses. A large part of the research agenda is inseparable from the specific organizational design itself — from "How does money work in a DARPA-riff?" to the hypothesis that good simulations could be the "Why now?" of a program design discipline. At the same time, one of the (admittedly grand) goals of the specific organization is to shift some of the conclusions in the synthesis; coupling the synthesis to the other parts allows us to say not just "Here is how the world is" but also "Here is how it could be."

An important goal for this piece is to argue for the existence of a new organization in the first place. Creating an organizational structure around a project prematurely can have many downsides, so the burden of proof rests heavily on the argument that you can't accomplish what you need to do without an "official" organizational structure. I will argue that there are a number of experiments that seem like they can only be done in the context of a new organization, and that they comprise some of the most interesting questions that the broad proposal wants to explore.

Calling out and exploring unavoidable tensions is one of the piece's core themes and one of its important roles. Innovation organizations are shot through with Straits of Messina, with Scylla waiting to snatch you if you focus too far on one side of a trade-off and Charybdis ready to suck you to your doom if you tack too far the other way. As we will see, these tensions often have to do with incentives. With these tensions, the only way to avoid a messy fate is to first know the upcoming dangers and then sail the narrow path between them, which will always be uncomfortable and require constant course correction. These tensions manifest even in the piece's very existence: If you think you have good ideas, you should show that by acting on them; at the same time, propagating the ideas can also be valuable if it enables other people to act on them. But acting on ideas and explaining them are often at odds! These conflicting imperatives are especially acute in this context, where we need an explosion of new models, not a single organization. The piece's ultimate goal is to effect change, but the nature of the beast is such that I suspect neither generalizable knowledge nor a specific organizational design will be sufficient on its own. Normally, people end up on one end of the spectrum or the other: either publishing a policy proposal or trying to lead by example (which doesn't even produce an artifact).

"Om nom nom" —Scylla and Charybdis

Finally, this piece is meant to build trust. Doing anything new requires trust, and research requires more trust than other disciplines. Many of these ideas don't have a "closed form" solution or a right answer, so the only way that I can convince you I've come to a reasonable conclusion is by walking you through the process of getting there. Hopefully, I can build that trust by showing you that I've really done my homework and walking you through the thoughts behind the actions. There are many uncomfortable truths and icky trade-offs embedded in good solutions R&D. Staring them in the eye requires trust in addition to raw logic.

Housekeeping

Links. Whenever I lean on an argument I make elsewhere in the piece, I try to use internal links that look like this (which will take you right back to this section). Don't worry about losing your place, because clicking an internal link will make a link back to the section you jumped from appear in the upper right corner. External links look like this one that actually links back to this piece. I try to use them only when it's painfully obvious what they link to, usually the name of a piece I'm referencing directly in the text.

Guided tours. This piece is long, and everybody will get more or less value from different sections. Selecting a persona in the section above will highlight the headings of some sections in the table of contents and gray out the headings of others in a "recommended path" for that persona. It won't make anything disappear. You can get rid of the highlighting by choosing Chart your own adventure.

Money notations. When I refer to historical monetary values, I've converted them into 2021 dollars, as indicated by, e.g., $(2021)1B for $1B in 2021 dollars.

Footnotes and sidenotes. This piece has both sidenotes and pop-up footnotes. Sidenotes are for external references to expand on a topic, while footnotes are for extraneous asides — you will lose nothing by not reading them.

Playfulness. This piece deals with a serious subject (to me, it is one of the most serious subjects). It's easy and often expected to take a grim authoritative tone to convey seriousness. Yet examples from Einstein's light beam to Feynman's plates and Shannon's unicycle suggest that playfulness not only does not get in the way of good work, it may be an ingredient. With that in mind, I've allowed myself to be a bit playful and irreverent at times, and hope that doesn't diminish the piece's seriousness in your eyes!

Institutional design is navigating an idea maze

Navigating an "idea maze" is a powerful analogy for a process that involves a series of hard-to-reverse decisions under uncertainty. (As far as I can tell, Balaji S. Srinivasan introduced the idea maze in his Stanford Startup Course, and Chris Dixon made it well known on his blog.) Idea mazes usually show up in the context of startups and the decisions an entrepreneur navigates to create a company: "Should we sell to businesses or consumers? Build a web application or an iPhone app first?" And so on. Despite being associated with startups, there is nothing about the analogy of an idea maze that restricts it to a particular domain; it can also be used in engineering, research, or, in this case, building new institutional structures.

Image Credit: Balaji S. Srinivasan

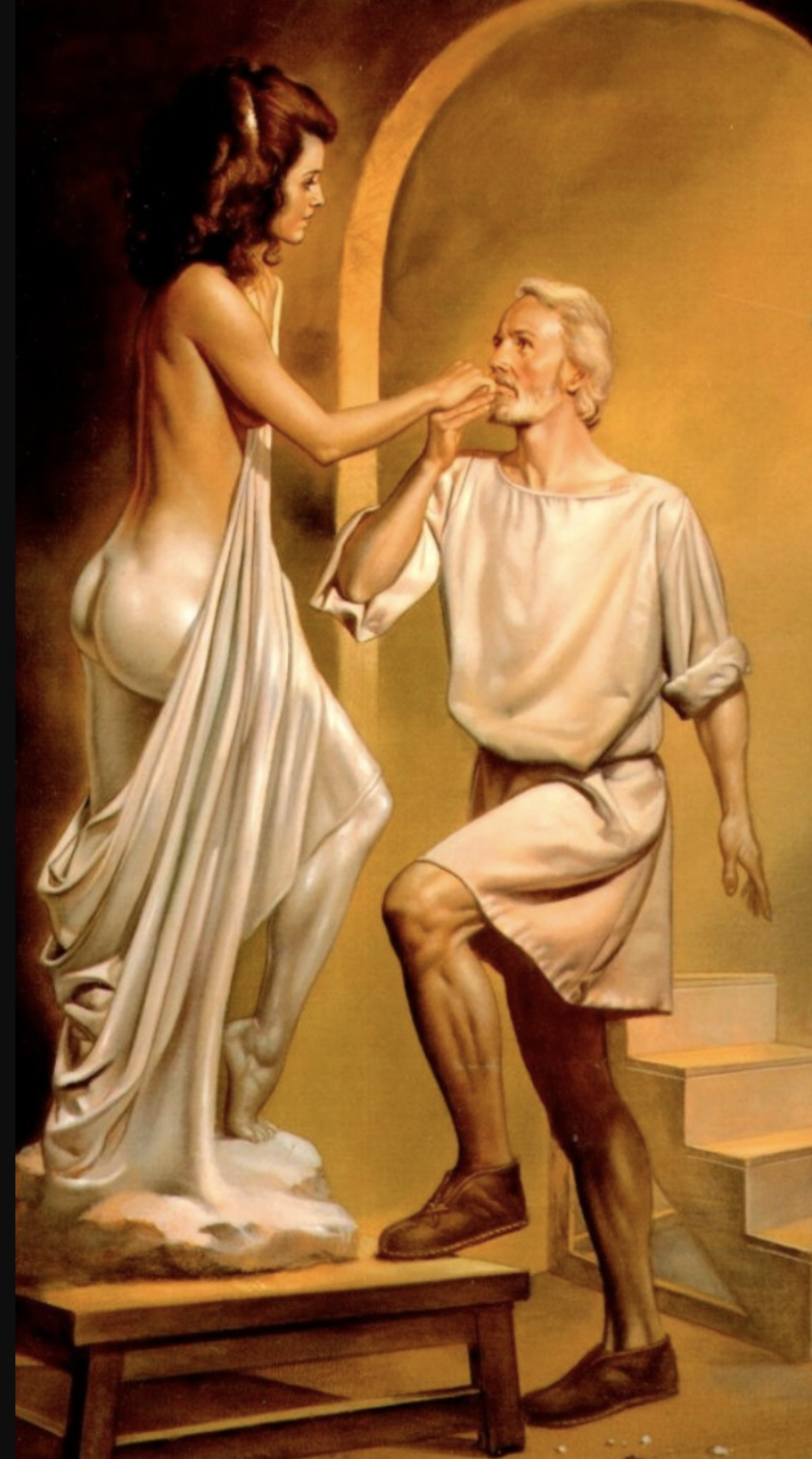

Both abstract and concrete ideas are essential for talking about institutional structures; extending the analogy of the idea maze to the full myth of the Cretan Labyrinth can act as intellectual glue between the two. On the one hand, it's important to talk about why a new structure is even necessary and about sweeping considerations that apply regardless of specifics. This is like looking at the maze from the outside: Where are its boundaries? Why does it exist? What dangers lurk within? At the same time, it's equally important to talk about gritty implementation details. These are the junctures in the maze and how you know that you've reached them. The abstract considerations are important for enabling people to come to their own conclusions, but without the details, it's easy to leave the difficult tradeoffs as frustrating "exercises for the reader."

No, not that labyrinth.

This piece has a three-part structure that addresses increasingly concrete questions. The first question it strives to answer is: Why does the maze exist and where are its boundaries? The second question is: What is the layout of the maze? And finally: What is the specific path we will try to chart through the maze?

Why does the maze exist and where are its boundaries?

When your goal is to navigate a maze, it's easy to treat it as a fixture in the world and ignore what it looks like from the outside and its history. But mazes exist for reasons. Don't forget that King Minos had the Labyrinth built in order to contain the minotaur! Describing the boundaries of the maze in the context of institution building is about understanding the scope of what we're setting out to do and the constraints in the world that shape that scope. The history lets us know what's lurking inside to kill and eat us.

The exterior of the maze is fractal — every time you zoom in on it, there are more interesting details to explore. As such, it's easy to fall into the trap of focusing only on the contours of the questions "Why does this problem exist?" or just "Is there a problem?" Answering these is a worthy goal, but it is not our goal. I spend a good chunk of the piece describing the exterior of the maze and why it exists, but only insofar as I feel it's useful for hypothesizing about the maze's interior and the path through it. This approach will frustrate some of you for its lack of rigor and others for its extraneous information. Not all sections are for everybody!

What is the layout of the maze?

The interior layout of the maze corresponds to the myriad decisions facing anyone who is trying to build an organization to tackle a specific niche. The layout of the maze — with its junctures, turns, and dead ends — is impossible to know for sure before you enter it. Even then, you'll only be sure of the junctures and distances you've actually traversed, and only if you keep careful track of them. However, you can sketch possible layouts. This sort of hypothesizing is important and underdone, especially in public, in large part because there is not just one path through the maze! In addition to explaining which decisions I think are correct, I want to both encourage other adventurers to enter the maze and equip them as well as I can.

It's also just good practice to lay out your hypotheses about not just what experimental results will be but why they will happen. Abstractly describing decision points is admittedly a bit of a hedge! Even if our specific implementation fails, I hope that it doesn't stand as a condemnation of the entire model.

I'm going to spend a good chunk of the piece describing the potential junctures that someone building a DARPA-riff will probably face and how to think about them before explaining which fork I think is correct.

What is the path that we hope to chart through the maze?

Finally, there is the actual path through the maze. Any organization can only follow a single golden thread, making one specific design decision at each juncture. I'll lay out which choices I think are correct given the picture of the maze I've constructed. This is the most concrete part, and also the most likely to be wrong or need to change. It has been nerve-racking to write for two reasons. First, concretely stating "I think doing this will work" opens you up to the terrifying possibility of being provably wrong. When you make general statements about the world, you can always point to qualifiers about why an example does not count as a disproof. Not so when you say, "I am going to try to do X and expect Y to happen." The second reason that laying out a specific path is nerve-racking is that people tend to evaluate ideas on their most concrete manifestations. So, in the same way that people accept entire ideologies on a few compelling anecdotes, they are likely to reject the entire path, or possibly the entire argument I've laid out, based on one dumb choice.

For some readers, this will be the most interesting part — concrete, actionable plans. For others, this will be the most boring.

Different disciplines usually focus on one part of the maze at a time. Historical and economic work usually focuses only on why the maze exists and its boundaries. Policy proposals usually focus on the layout of the maze. Entrepreneurs usually focus on specific paths through the maze (but write about them only in retrospect). Chesterton's Fence (generally, things are done the way they are for a reason) suggests that focusing on one part at a time is probably good practice, but in this specific situation, it feels like each piece would be weak without the others. Describing the state of the world would just be adding to a growing pile of stagnation literature if it weren't supporting action. Without the context provided by the boundaries, the maze's layout would be hard to evaluate, and without the specific plan, it would feel like yet another call for someone to do something! And without the boundaries and layout, it would be impossible to evaluate a proposed path beyond "Well, that seems smart/stupid" or to have a discussion about how it could be improved from a common framework.

Part I: Problems and Possibilities - The Boundaries of the Maze and the Minotaurs Within

In this section, I want to convince you that:

- In the past, industrial labs filled a particular niche in the innovation ecosystem.

- Especially in the world of atoms, industrial labs no longer fill the niche they did in the early-to-mid 20th century.

- The niche industrial labs once occupied still needs to be filled, and we should not expect organizations that look like industrial labs to fill it.

Overlapping institutional constraints rule out several classes of creative work

Different institutions are each good at enabling certain sets of activities that we associate with "innovation": Academia is good at generating novel ideas; startups are great at pushing high-potential products into new markets; corporate R&D is unparalleled for improving existing product lines. Together, the institutions that play a part in creating new knowledge and technology make up an "innovation ecosystem." Both the terms "innovation" and "innovation ecosystem" are often frustrating suitcase words. But it is useful to have a shorthand for "all the institutions that enable activities that collectively cover the process of an idea becoming a new impactful idea or technology." The organizations that make up these institutions vary wildly, but they share enough commonalities that it's worth collectively referring to them as "innovation organizations."

Obviously, each institution can't excel at every type of activity. Each institutional structure has some set of constraints that prevent it from engaging in certain activities well or at all. Many factors shape these constraints, and they are effectively synonymous with "incentives." You could think of each institution as occupying some area on a map of all possible activities: the institution is well suited to tackle the activities it covers and poorly suited to tackle activities at the edge or outside of its area. Some activities are covered by multiple institutions, but some aren't covered by any institutions. These activities are "constrained out" of happening by existing institutional structures. For example, unsexy long-term projects that won't necessarily produce a product or novel papers are simultaneously beyond the scope of venture-funded startups, corporate R&D, philanthropy, and academia. Venture-funded startups could support either a shorter timeline or more product focus; corporate R&D would want the activities to be sexy or product-focused; philanthropy would want them to be sexy; and academia would push for novelty and papers.

Of course there are counterexamples of projects with those characteristics that have been supported by existing institutional structures! It will always be possible to make arguments like "SpaceX exists, so the innovation ecosystem is fine." Antibiotics are pretty great, even though people can sometimes survive infections without them. Similarly, it's worthwhile to try to enable more constrained activities, even if a few projects make it through.

Institutionally constrained activity is a useful and precise way to think about the vibe that the world could be on a more wonderful trajectory than it's on right now.

Instead of arguing over the Great Stagnation (see Tyler Cowen's The Great Stagnation: How America Ate All The Low-Hanging Fruit of Modern History, Got Sick, and Will (Eventually) Feel Better and a lot of related literature) or lamenting the fact that some combination of research, academia, science, and physical innovation is broken, looking through the lens of institutional constraints enables us to talk specifically about which classes of activities we expect to be happening that are missing or anemic. We can then ask what institutional constraints are creating those gaps. Instead of asking, "Where's my flying car?" (see J. Storrs Hall's Where Is My Flying Car?: A Memoir of Future Past), we should be asking, "What activities would enable flying cars, and what institutional constraints are stopping them?" This analysis can in turn help us address those gaps by pointing to concrete ways to adjust incentives in existing institutions or to new institutions with different sets of incentives that one could create. Some important questions to ask are: What incentives are preventing institutions from enabling these activities? Should I be shifting incentives within an existing institution or building a new one? How do I keep a new institution from falling into the same incentive traps?

Looking at the innovation ecosystem through this lens suggests that there are many different activities that we lump together under the umbrella of "research" or "innovation," and many ways they could be improved. There are infinitely divisible multitudes, but I will briefly note three clusters of them, primarily because they are frequently conflated and I explicitly want to say, "This piece is about one of these but not the other."

One cluster where things could be better is what I might call "breakdowns in the scientific process." Here you see issues like the replication crisis and science being judged not on one of several scientific epistemologies but on politics and its ilk. For more on breakdowns in the scientific process, I recommend Brian Nosek's work on the replication crisis or Stuart Ritchie's book Science Fictions.

Another area for improvement is in enabling paradigm-shifting work. It's a bit of a trope, but there is something in the fact that someone from 1920 would barely recognize the built world in 1970, but someone from 1970 wouldn't be too surprised by today's cars, planes, and buildings or their capabilities. The built world hasn't experienced many paradigm shifts. Similarly, physicists are still working on string theory 50 years later, and despite incredible advances in biology, nothing has displaced the structure and centrality of DNA as the dominant biological paradigm. There are legitimate arguments that this narrative ignores less visible paradigm shifts, like those in computing, or the fact that the late 19th to mid 20th century may have been a massive outlier in the realm of physical innovation. I don't think these arguments are incompatible with the assertion that we could do better.

You can roughly divide paradigm-shift-enabling activities into two distinct modes. The first mode is paradigm-shifting science. Here the sentiment is that "Einstein would be stuck in the patent office." We don't seem to have a healthy system of unfettered research that is producing paradigm shifts in how we understand the world, like those seen up through the 1950s or '60s in everything from quantum chromodynamics to general relativity and the double-helix structure of DNA. The second mode is paradigm-shifting engineering. "Where's my flying car?" We don't seem to be able to build new systems that radically change our physical capabilities. The line between science and engineering is of course nebulous, porous, and full of feedback loops.

Distinguishing between these areas isn't just a semantic exercise; it's important for enabling action. While they share many similarities and causal links, each area prioritizes different activities and mind-sets. As a naïve example, paradigm-shifting science probably depends on monomaniacal individuals running an idea to ground, sometimes over the course of decades, while that same mode would be detrimental to paradigm-shifting engineering because it requires more pragmatism and coordination. Think Einstein and general relativity vs. Polavision, Polaroid's failed home-movie system. Polavision took 10 years and half a billion dollars to make. In Loonshots, Safi Bahcall attributes its failure to expensive but amazing features driven by Polaroid's founder. It's important to address each of these areas, but there needs to be a division of labor. Despite the temptation to do all the things, "fixing research" is going to require many new institutions (that I suspect will not scale) to address different niches in constraint space. Additionally, legible niches can help prevent mimetic infighting over who is going to "save science." Given all of this, enabling more paradigm-shifting science is incredibly important, but it won't show up in the rest of this piece as we turn our focus to the niche in the innovation ecosystem that enables paradigm-shifting engineering.

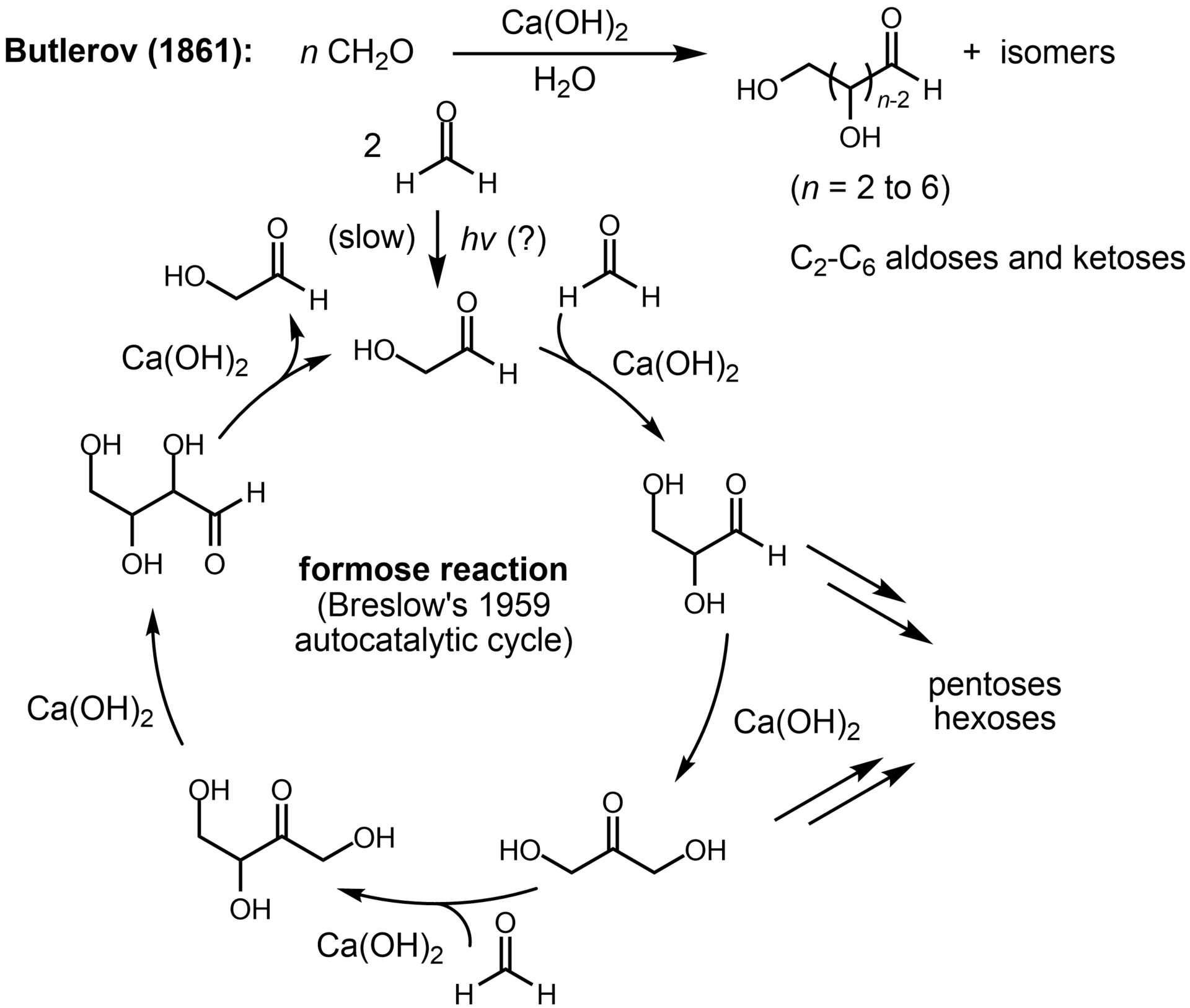

Feeling around the edges of solutions R&D

How should we think about the bundle of activities that enable paradigm-shifting engineering? The bundle is incredibly nebulous: absolutely real, but hard to capture, fuzzy-edged, and context-dependent. There is clearly something that is more focused on products and outcomes than the exploration of nature we associate with Newton or Einstein, yet at the same time doesn't have the steely-eyed focus on commercial products we associate with Edison or Jobs. Invoking these contrasting historical figures brings to mind Donald Stokes's concept of Pasteur's Quadrant (see Pasteur's Quadrant: Basic Science and Technological Innovation). Stokes captures the mind-set excellently but doesn't focus on the actual institutional activities or how to enable them. Closer to the mark, DARPA director Arati Prabhakar captures the spirit of what we're striving for in her description of "Solutions R&D":

Solutions R&D weaves the threads of research from multiple domains together with lessons from the reality of use and practice, to demonstrate prototypes, develop tools, and build convincing evidence. Because it reaches into and connects all the parts of the innovation system, solutions R&D is a powerful way to ratchet the whole system up faster, once some initial elements of research and implementation are in place. Doing it well takes a management approach that combines a relentless focus on a bold goal with the ability to manage the high risk involved in creative experimentation. (From "In the Realm of the Barely Feasible.")

"Solutions R&D" is certainly a great term for the niche we're interested in. We don't have to keep referring to it as "that bundle of activities that enables paradigm-shifting engineering" (though it would lead to an impressive word count). But like other nebulous things, such as clouds, science, and porn, a name doesn't do much to further understanding the important properties of the activities we're talking about, why they're not as common as we'd like, and how to change that.

Perhaps frustratingly, the best strategy for getting a handle on nebulous but very real things is not to rigidly define them but to "feel around the edges." People are terrible at describing what they want given a blank slate, but we're pretty good at knowing what we want when we see it. Considering what triggers the "Yes, that!" for solutions R&D, we consistently arrive back at the industrial labs of the early-to-mid 20th century and Bell Labs in particular.

In the past, industrial labs filled a particular niche in the innovation ecosystem

Solutions R&D is nebulous, but we can get a sense of it by feeling around the edges and looking at what sorts of things happened at great labs that don't seem to be happening now. While industrial labs (including Bell Labs and PARC themselves) still exist, they no longer fill the niche they did in the early-to-mid 20th century. Understanding what changed and why is part of understanding the niche more broadly. While the early-to-mid 20th century was home to many well-regarded industrial labs, like GE Laboratories (f. 1900), DuPont Experimental Station (f. 1903), and Kodak Research Laboratories (f. 1912), Bell Labs (f. 1925) stands head and shoulders above them in the pantheon. (For more about why industrial labs started, see "The Changing Structure of American Innovation: Some Cautionary Remarks for Economic Growth.") Most of the properties and important activities in this section are drawn primarily from accounts of Bell Labs, supplemented by accounts from other labs. This Bell Labs focus is admittedly in part because of the streetlight effect — people have chronicled Bell Labs much more extensively — but also because Bell Labs' output was such an outlier. I feel comfortable doing this because, unlike the idiosyncratic success of individuals or startups, there appears to be a consistent set of activities shared across industrial labs that are missing today.

The trick here is to distinguish between the "universal" characteristics of the activities that enable good solutions R&D and the particular set of tactics and strategies that Bell Labs used to implement those characteristics. It's important to distinguish between the two because we might need to use different tactics to achieve the same characteristics in a new, 21st-century context. Blindly following tactics regardless of context is ineffective and cargo-culty.

I've identified nine characteristics that enabled industrial labs to fill the solutions R&D niche.

- Industrial labs enabled work on general-purpose technology before it specialized.

- Industrial labs enabled targeted piddling around, especially with equipment and collaborators who would not otherwise be accessible.

- Industrial labs enabled high-collaboration research work among larger and more diverse groups of people than academia or startups.

- Industrial labs enabled smooth transitions of technologies between different readiness levels — they cared about both novelty and scale.

- Industrial labs provided a default customer for process improvements and default scale for products.

- Industrial labs often provided a precise set of problems and feedback loops about whether solutions actually solved those problems.

- Industrial labs provided a first-class alternative to academia in which people could still participate in the scientific enterprise.

- Industrial labs enabled continuous work on projects over six-plus-year time scales. The work to create the transistor took eight years (because of a gap created by World War II) and making it mass-manufacturable took another eight.

- Industrial labs enabled work in Pasteur's Quadrant — explorations into natural phenomena with an eye toward exploiting those phenomena for human flourishing (and profit). (Pasteur's Quadrant is an excellent exploration into the nuances of different types of research.)

Exceptions abound, of course. Non-industrial lab organizations exist today that embody many of these characteristics, and not all golden-age industrial labs did all these things all the time. I'll expand on some (but not all) of these points below.

Industrial labs enabled work on general-purpose technology before it specialized

Specialization has a complicated relationship with new technologies. New technology will never be as good as old technology along every dimension. This failure of Pareto-superiority is why frontier technologies need to start in niche markets where they are especially valuable. It's rare that a technology can do well in a niche market without some specialization. If you imagine the straightest path that a technology can take to its most powerful or general use, the specialization work to fit into niches can require larger or smaller diversions from this path. These diversions take the form of everything from technology development to marketing and building sales channels for a company. Going from niche to niche is essential, but you can also get stuck in a niche or jump onto a different development path altogether. Say what you will about him, but Elon Musk is the master of charting paths between niches: roadster → high-end sedan → mass-market car; NASA-subsidized LEO rocket → GEO rocket → reusable GEO rocket → Starship. More time and resources can enable you to pick an "optimal" sequence of niches or search around for yet-unknown niches instead of being forced to hop into the nearest or most obvious ones. More abstractly, general-purpose technology needs developmental slack (see "Studies on Slack"). In other words, if you imagine decoupling from market discipline as cave diving, industrial labs acted like extra oxygen tanks.

Industrial labs' work on unabashedly general-purpose technologies stands in contrast to modern startups. I don't know how often I've seen a presentation from a grad student or professor that goes something like, "We've invented this incredible technology that could potentially do [amazing thing]; however, it will take a lot of work to get there, so we're starting a company to build a product to do [kind of pathetic thing that will still require a lot of specialization and company-building] in [domain totally unrelated to [amazing thing]]." While I'm usually sure it's doomed to failure, it's unfortunately the best move, given history and the constraints on startups. First, many successful startups do look like they started in a tiny, scoffable niche — Tesla (weird rich people), NVIDIA (gamers), PayPal (Beanie Babies on eBay?), Apple (hobbyists), Twitch (life livestreaming), Amazon (books) ... the list goes on. However, none of these weird niches required huge diversions from the critical path. Second, "start in a niche" is folk startup wisdom for a reason. History is littered with the corpses of startups that were going to be the next platform or general-purpose technology as soon as they launched: General Magic, NeXT, Quibi, Atrium, Rethink Robotics, and Magic Leap. Many others that started with grand ambitions were forced to crawl into a niche to survive — I won't name names here. At the same time, startup investors want to see massive potential returns. "Find a compelling story about how a niche becomes a billion-dollar market" puts a technology between a rock and a hard place.

In most cases, it wouldn't have been feasible to do the necessary work to find or develop for a better niche in an academic setting either. While that work still requires "research," it also requires focus and systems engineering, both things that academia does not support for a slew of reasons.

Of course, it's possible that I and others just overestimated the potential of many of these technologies. However, the examples of technologies that were massively impactful only after "hanging out" in an industrial lab for many years would seem to argue otherwise. The transistor, public key cryptography, Pyrex, solar panels, and the graphical user interface (and personal computing in general) come to mind. Industrial labs provided the environment to do the work to get a technology to a point where it was viable for a "useful" niche, and enabled it to find that niche in the first place.

In addition to giving projects longer time scales, less existential risk, and larger budgets than most startups, there are several specific ways that industrial labs helped technologies find good niches that startups and academia don't provide.

When you're still trying to figure a technology out, it's not clear which skill sets you want in the room. Industrial labs made it easy for people to float between different projects, loosely creating and breaking collaborations. Bell Labs was particularly good at enabling these free radicals:

"The Solar cell just sort of happened," he [Cal Fuller] said. It was not "team research" in the traditional sense, but it was made possible "because the Labs policy did not require us to get the permission of our bosses to cooperate—at the Laboratories one could go directly to the person who could help." From Jon Gertner's The Idea Factory

To get the same effect at a startup, you need to either find a magical individual who has gone deep on multiple areas (an M-shaped individual instead of T-shaped individual?) or hire people who might ultimately turn out to be useless. Startups don't have the slack for this.

Another dividend from industrial labs' slack is the room to notice something unexpected, say, "Huh, that's funny," and run the anomaly to ground. Perhaps the most famous slack dividend is the discovery of the cosmic microwave background when Arno Penzias and Robert Woodrow Wilson were using the Holmdel Horn Antenna to try to do satellite communication experiments. They noticed persistent noise in their measurements that didn't seem to respond to recalibration or cleaning. Eventually, they got in touch with theorists who connected the noise they saw with predicted echoes of the (still very hypothetical) big bang. In a lower-slack (more "efficient") organization, Penzias and Wilson wouldn't have had the bandwidth to dig into the mysterious noise beyond determining that it didn't seem to be fixable.

Modern corporate R&D has become worse at enabling work on general-purpose technology. Bell Labs had a culture that was almost the polar opposite of the "demo or die" pressures in many modern organizations. The need to produce demos for corporate higher-ups may create the same pressure to specialize into a niche that makes startups bad places to develop general-purpose technology. One way to look at this would be to ask: How has the management of corporate R&D changed over time?

Industrial labs enabled targeted piddling around

It was effortless. It was easy to play with these things. It was like uncorking a bottle: Everything flowed out effortlessly. I almost tried to resist it! There was no importance to what I was doing, but ultimately there was. The diagrams and the whole business that I got the Nobel Prize for came from that piddling around with the wobbling plate.

—Richard Feynman, Surely You're Joking, Mr. Feynman

So many stories of golden-age industrial labs revolve around researchers literally just trying out a lot of stuff. "Hey, we came up with this new chemical, what's it useful for?" In the startup world, that's often derided as a "solution looking for a problem." Arguably, though, many important technologies started off this way — they weren't a hole-in-one solution to a problem. We don't seem to have a place for this "targeted piddling around" to happen anymore in situations that require specialized knowledge and equipment.

Disciplines where targeted piddling around can happen seem to be healthier. This contrast is stark looking at software compared to, say, space technology. Modern software (and slowly, biology) suggests that, in many cases, piddling around requires cheap, democratized technology. Industrial labs may have been able to loosen that requirement. Stories about DuPont and 3M's industrial labs always involve a lot of "just trying stuff out."

It's important to call attention to the "targeted" part of "targeted piddling around." Contrary to common perception, Bell Labs and PARC didn't give researchers free rein to work on whatever they wanted. There were relatively few milestones, and researchers had enough slack from management to explore adjacencies. However, people were explicitly asked to work on high-level goals that would benefit AT&T's continent-spanning communication system.

This targeting stands in contrast to accounts extolling how much freedom researchers had. It's almost impossible to confirm, but I suspect that researchers who portray themselves as having completely free rein in industrial labs are unreliable narrators. My hunch is that they felt like they had entirely free rein because the lab managers were good at hiring people whose interests were sufficiently aligned with the lab's goals that anything they chose to do was within some window. Another alternative is that they gave a few people free rein and the personal accounts are from the wunderkinds. Claude Shannon illustrates both of these possibilities — he was absolutely a wunderkind who was given free rein. That is, up until he started working on projects like a "mechanical mouse" that Bell Labs management couldn't possibly justify to regulators as being related to communication.

Today, many people implicitly assume that targeted piddling around happens in universities. Academics certainly do piddle around with ideas, but they're less incentivized to do it with technology applications. You can't really write a paper about how you spent a year modifying your novel technology to 100 specific applications. Even when academic labs do piddle around with technology applications, the targeting systems are often suboptimal. Often, academics are working on a specific application for a specific industrial partner, or they've effectively made up a use case out of whole cloth. This isn't to cast shade on academics, but to point out that academia is not set up to enable the sorts of feedback loops that enable just the right amount of targeting.

Getting a general-purpose technology to work is actually an iterated cycle of generality and specificity. You can think of two coupled modes: testing a general tool on many specific applications, and testing many specific components to get a general tool to work. Xerox PARC's GUI work is a good example of the former mode — they stress-tested the general system by using it for day-to-day work! Edison's workshop (arguably the OG industrial lab) did a lot of "trying stuff out," but the opposite way, where they had a general application in mind (lighting, recording, picking up voices on the phone) and just went through a thousand materials to see what would fit the bill.

The two modes are strongly coupled. While you're trying out a thousand things to figure out how to do a specific thing, you may realize that the five hundred and twenty-first thing is no good for the intended purpose, but might be amazing for another thing. However, that realization can easily be tossed out the door without the room to explore that hunch (because usually it's a hunch). Startups, academia, and 21st-century corporate R&D are rarely set up incentive-wise to enable running that hunch to ground. You do see this happen with software startups, because it is much easier to do targeted piddling around with software. Arguably, this could be one reason that we've seen less stagnation in software.

In contrast to many modern corporate R&D labs, Google Brain feels like it does the same thing. And perhaps that's why it feels like a much more "healthy" corporate R&D organization. In fact, a lot of the pure AI work feels a little bit like this targeted piddling around. Except that they actually don't take it the one step further to be an experimental product.

The Valley of Death is a concept that pops up repeatedly in discussions about technology development. (For a good short article on the Valley of Death and its relationship to technology readiness levels, see "Technology Readiness and the Valley of Death.") It's nebulous and overloaded, but it always refers to a situation where there is secretly much more or different work than you'd expect between a development stage that feels "complete" and its natural next step; often, this is between a proof of concept and a prototype or between a prototype and a manufactured process. I don't find the concept particularly useful, but the thought that targeted piddling around might be the equivalent of "hanging out" in the Valley of Death feels generative. Hat tip to Michael Filler who (to my knowledge) created this intuition pump.

Industrial labs enabled smooth project ramp-ups with high ceilings

A common pattern in golden-age industrial labs was an individual or small team piddling around with something for a while, then roping in a few people part-time and, if it showed promise, going from there. Fewer than a dozen people were working on transistors at Bell Labs from 1939 to 1947, but within two years, many dozens of people across multiple teams were figuring out how to manufacture them at scale and where they might fit within AT&T's system. Industrial labs were unique in their ability to enable research to start small and then ramp, smoothly transitioning along the technology readiness curve from promising experiment to prototype to end-user-quality product.

These smooth ramp-ups stand in contrast to both academic labs and startups. Projects can absolutely start small in academic labs, but they will hit a ceiling quickly because of both grant dynamics and publishing pressures. Individual grants rarely break the single-digit-million dollar mark, which can only support a small team. Grants are hard to combine to fund a single project because most grants want to support a discrete piece of work. Notably, these grant dynamics mean that national labs and other non-university organizations that depend on grants are under the same constraints. If grants are the input to academic labs, publications are the output. Academic careers are built on citations, but the larger teams required by scaled-up projects either dilute citations or the work isn't novel enough to publish.

Small businesses don't have the same ceiling as academic labs, but it is hard for them to scale smoothly unless the work produces near-term profit. Unfortunately, some of the most interesting work precedes profits by a significant chunk of time (even if it will be profitable eventually). So, in order to pursue that work, a small business would need to transform itself into a high growth startup. Startups have the opposite problem of academia: They have a hard lower bound on scale. They're expected to grow quickly — if a startup is not constantly hiring, it's a red flag.

Ideally, the combination of academic labs and startups should enable this smooth ramping, allowing a project to pupate from academia to a startup when it's mature enough. However, there is often a gap between where you can get in an academic lab and what you need to start a startup. This is where the Valley of Death rears its head again!

It's informative to compare Google Brain and (the organization-formerly-known-as-Google-)X in the context of smoothly ramping projects. Google Brain has a ton of different things being tinkered on at once, with projects of all different scales. By contrast, X famously prides itself on "killing ideas quickly." (See Derek Thompson's "Google X and the Science of Radical Creativity" in The Atlantic.) Hard early gating processes prevent the sort of long-burn early work that led to innovations that range from transistors to nylon; hard early gating processes are a good move for startups but perhaps squander the advantages of corporate R&D.

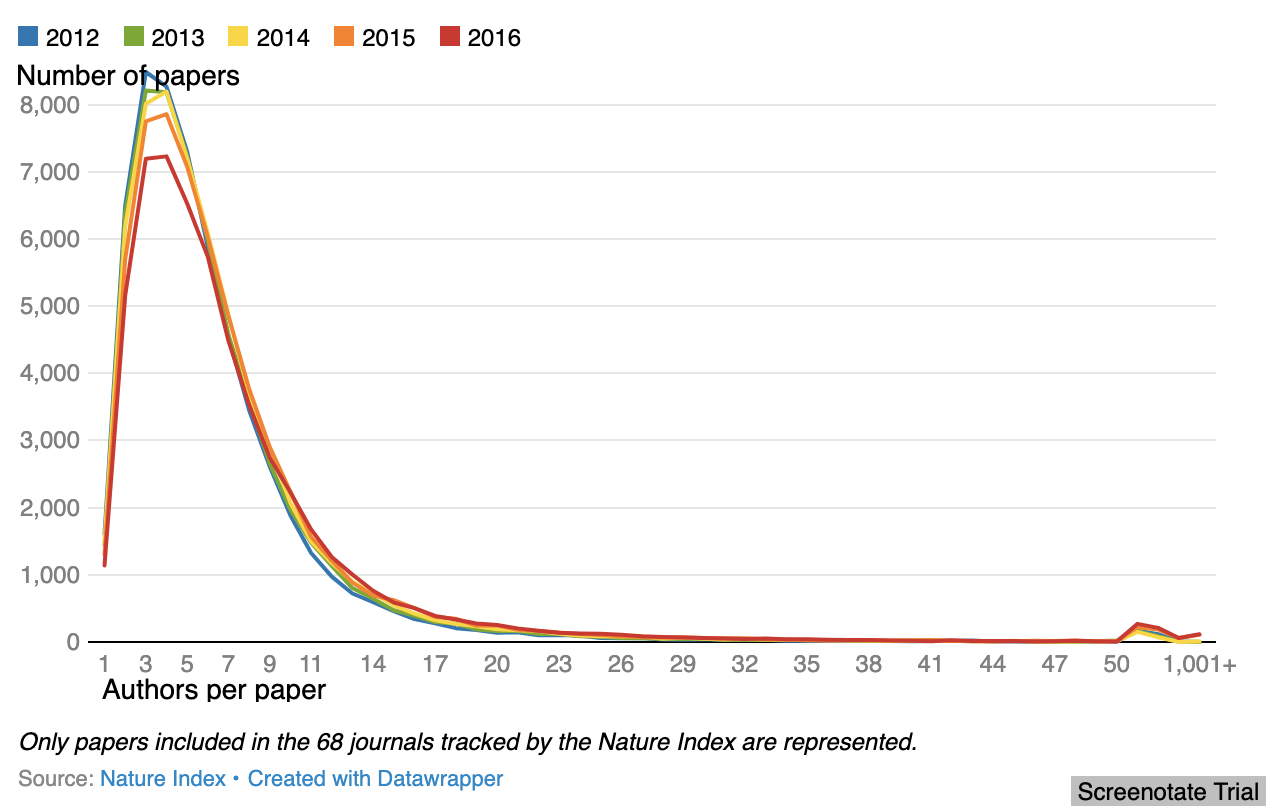

A quick research diversion! It would be fascinating to plot a histogram of project sizes at different organizations. My hypothesis is that the organizations that seem to be doing the best solutions R&D would have a nice, smooth decay: lots of little projects, a few big projects, and many in between. I suspect that many organizations would have a hole in the middle. Maybe that's the Valley of Death appearing as a statistical phenomenon.
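The histogram idea can be made concrete with a small sketch. This is only an illustration of the hypothesis, not an analysis: the team sizes below are invented, the function names `size_histogram` and `has_hole_in_middle` are hypothetical, and bucketing projects by powers of two of headcount is just one assumed way to measure "project size."

```python
from collections import Counter


def size_histogram(team_sizes):
    """Count projects per power-of-two bucket of headcount.

    Bucket 0 holds teams of 1, bucket 1 holds 2-3, bucket 2 holds 4-7, etc.
    (An assumed binning; any monotone size measure would do.)
    """
    counts = Counter(n.bit_length() - 1 for n in team_sizes)
    return [counts.get(bucket, 0) for bucket in range(max(counts) + 1)]


def has_hole_in_middle(hist):
    """True if an interior bucket is lower than some bucket on each side."""
    return any(
        hist[i] < min(max(hist[:i]), max(hist[i + 1:]))
        for i in range(1, len(hist) - 1)
    )


# Invented data: headcount of each project at two imaginary organizations.
smooth_org = [1] * 16 + [2] * 8 + [3] * 4 + [5] * 4 + [6] * 2 + [9] * 2 + [20]
gapped_org = [1] * 16 + [2] * 8 + [40] * 3 + [100] * 2

for name, sizes in [("smooth", smooth_org), ("gapped", gapped_org)]:
    hist = size_histogram(sizes)
    print(name, hist, "hole in the middle:", has_hole_in_middle(hist))
```

With real data, the interesting question would be which organizations look like the first list and which look like the second; the hole test here is deliberately crude and would need a more careful definition before drawing conclusions.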

Prototyping needs manufacturing in the room

"Manufacturing" is code for the folks who will produce the thing at scale.

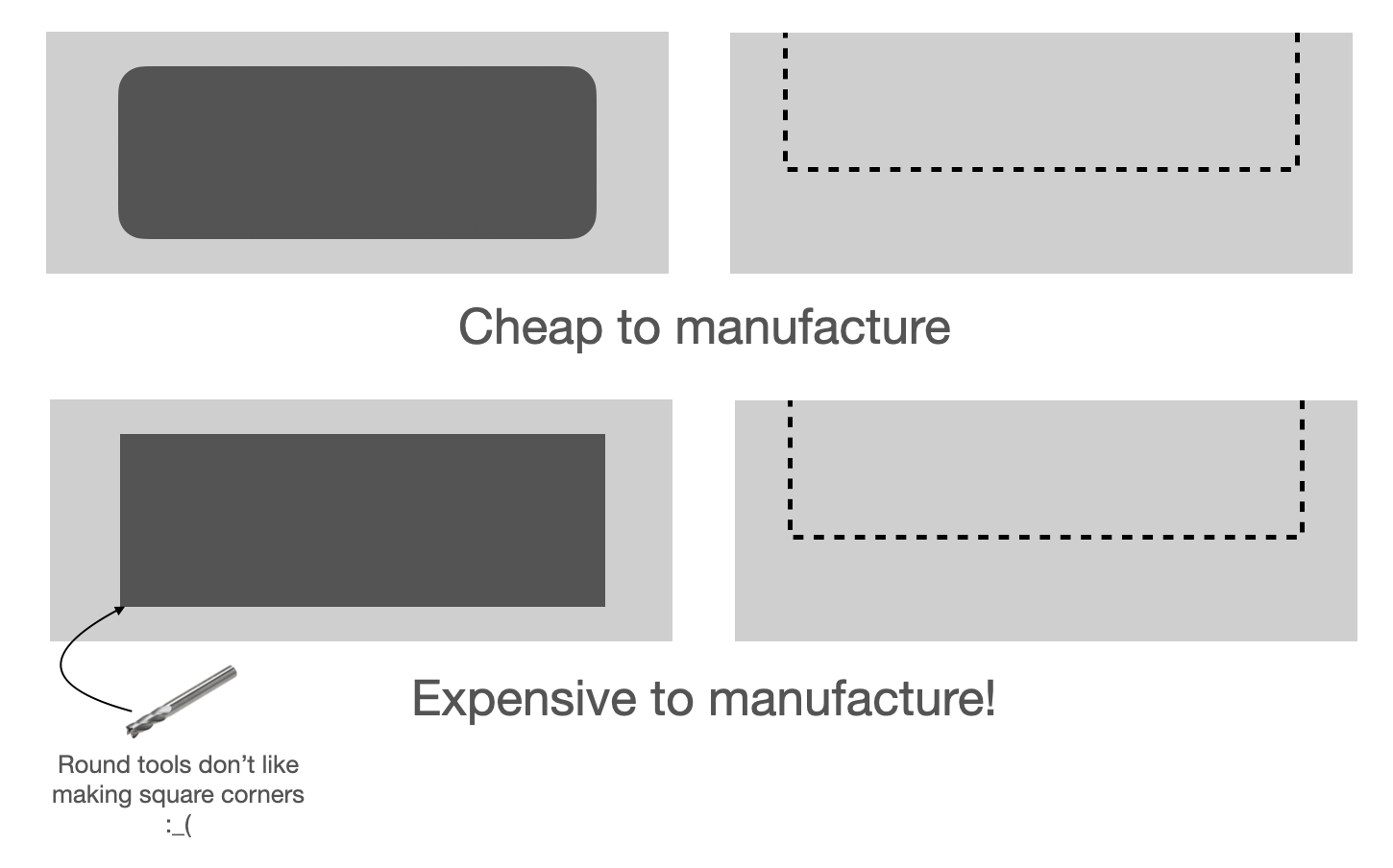

It's easy to think that if the first version of an invention works consistently, you can just turn around and make a bunch of them. However, the way something is made has a huge influence on its cost. A piece of metal that a skilled craftsperson shaped by hand is much more expensive than that same piece of metal cast in a mold. Sometimes it's straightforward to turn the former into the latter. However, sometimes you need to redesign the thing almost from scratch.

The difference between these two situations is often not obvious to someone without manufacturing experience. There are many non-obvious design choices that make scaling easier or harder. For example, perfect right-angle corners in a rectangular cutout are completely free (and the default!) in a CAD model, but they are extremely hard to machine precisely. Often, you don't actually need the interior corners of the cutout to be perfect right angles, but incorporating that tolerance requires shifting other pieces of the design to accommodate it without changing functionality. These design shifts are fairly straightforward if you make them while prototyping. However, once the entire system is roughly complete, manufacturing-focused design shifts can have cascading effects that require redesigning many other components as well. This is often what's going on when there's a weirdly long gap between a company triumphantly showing off a prototype or concept version of a product and actually putting something in customers' hands.

Not only are the impediments to scaling non-obvious, they're often tacit knowledge. Instead of a legible rule of thumb like "Avoid precise angles at the bottoms of cutouts," as in the example above, someone with a lot of experience will take one look at a design and say, "Mmm, nope, that's a bad idea." It's not like they could have given you an explicit list of dos and don'ts — most tacit knowledge is illegible, and even if you could tease it out, the list would be infinitely long.

The importance of having manufacturing in the room applies in software as well as hardware. Different implementations of the same algorithm can parallelize fine or completely break, a functional piece of code could leave giant security holes, etc.

Healthy industrial labs require a trifecta of conditions

A pattern starts to emerge when you ask: How do golden-age industrial labs differ from corporate R&D today? Corporate R&D no longer seems to have the impressive output or cachet that it once did.

The golden-age industrial labs shared a trifecta of conditions:

- They were run by a monopoly.

- They were working on a clearly high-potential technology.

- That technology addressed one or more existential threats to the company.

Digging in:

1. They were run by a monopoly.

More specifically, they were run by companies that were extracting monopoly rents on some high-margin product. Xerox was the only game in town for copy machines (which, believe it or not, were as essential to many businesses as Excel is today), DuPont controlled well-known materials like Teflon and Lycra, Corning had Pyrex and silicone, and of course AT&T controlled the telephone networks.

2. They were working on a clearly high-potential technology.

The technologies the labs worked on generated strong conviction that if researchers could actually pull it off technically, the parent company would be able to sell it. "If you can replace a vacuum tube with something that uses 1000x less power, of course people would use it." "If you can create glass that won't shatter when you heat it up and cool it down, of course people would use it."

What's not clear is why so many fewer technologies seem to fall under that umbrella now. It could be that we picked the low-hanging fruit in atom land. It could be that people are just more pessimistic about what we can build. It could be that timelines have shrunk, and there is no high-potential technology on those timelines. It could be that people think in terms of products instead of technology. Or it could be that companies have scoped down, so many fewer technologies fall into "things the parent company can sell."

3. That technology addressed one or more existential threats to the company.

Conditions 1 and 2 are insufficient if the technology the lab works on doesn't address existential threats to the company. This third condition is often ignored but perhaps most important! In order for an industrial lab to have the massive impact we associate with the greats, it needs active help from its parent organization to bridge the huge gap between an invention and widespread impact. Someone needs to put in active effort to diffuse technology into the world, and if the company doesn't feel intense pressure to do the diffusion work, the technology will languish.

For companies, leveraging core organizational capabilities for a new high-margin product is one of the most straightforward and common ways that new technology can address existential threats. Products become commoditized over time and companies need to keep growing to keep their valuations high. Thus, new technology can address the existential threat of low margins and flagging growth. However! If that technology isn't in line with core capabilities, taking advantage of it would require significant organizational changes, which pose a different (at least perceived) existential threat. So only technologies aligned with an organization's core capabilities address existential threats. This core-business alignment may also have contributed to industrial labs' impactfulness beyond just enabling them to exist because a technology that can take advantage of existing manufacturing and distribution channels is more likely to be impactful.

The different dynamics of Xerox PARC's work on the laser printer and the personal computer are illustrative. While PARC is legendary for the impact of its personal computing work, structurally, most of that impact shouldn't have happened. PARC's personal computing work wasn't tied to Xerox's core business. Different technologies require different organizations, and Xerox wasn't set up to scale up and build a business around personal computing. The only reason it was impactful was because of a crazy set of contingencies — Steve Jobs's "raiding party" and Bill Gates quickly following suit.

On the other hand, the laser printer was aligned with Xerox's core business. Xerox already sold printers and copiers; the laser printer, while a revolutionary new technology, was effectively a better version of those existing products. Xerox could leverage its management structure, manufacturing apparatus, and sales channels to diffuse laser printers with relatively few alterations.

Through the lens of aligning with core capability, Bell Labs practically cheated. AT&T's core capability was the full stack of "communications," so everything from chemistry that kept telephone poles from rotting to information theory that enabled more calls to fit into each wire was actually tied to the company's core business. Transistors had the potential to integrate directly into AT&T's system and enable faster, cheaper service. Faster, cheaper service was an existential priority for AT&T, because if service didn't continue to improve, the US government would have an excuse to break the company up.

I suspect that the existential threat criterion is a big factor in the difference between Google Brain and the organization formerly known as Google X. Better machine-learning technology directly ties into Google Cloud services, search, Gmail, etc. While drone delivery and autonomous cars can potentially be big businesses, they are basically orthogonal to Google's core business.

These criteria provide a springboard to briefly explore some abstract but important concepts that seem to apply beyond industrial labs to innovation organizations more broadly.

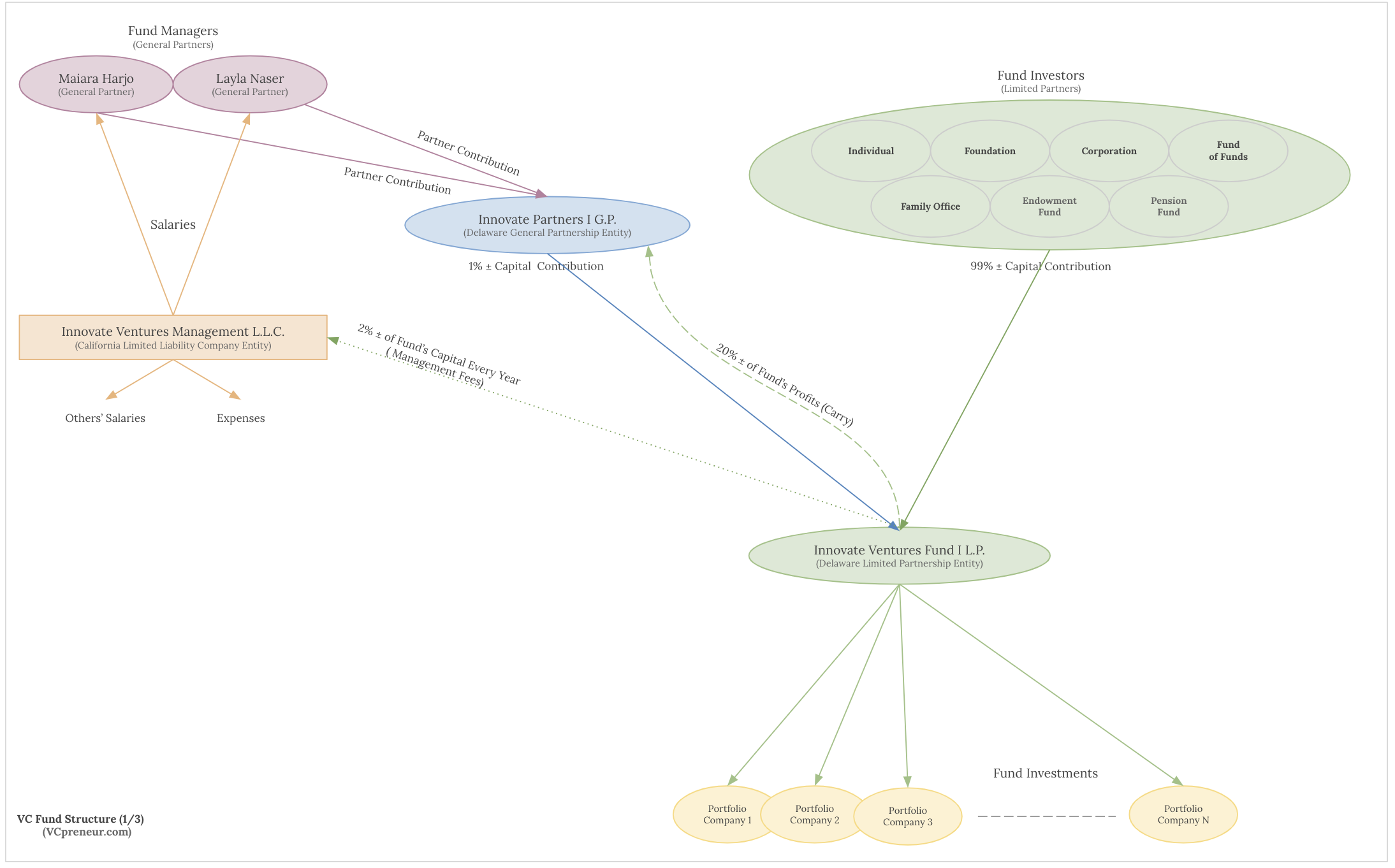

Innovation organizations need a money factory

Innovation organizations cannot depend on their outputs for free cash flow. A core part of what makes them innovation orgs in the first place is that they create things riddled with Knightian uncertainty that aren't necessarily products. (I'm using Knightian uncertainty in its broader sense — situations where there is not only uncertainty but you don't even know what the probability distribution is or even what axis to measure it on. See Risk, Uncertainty, and Profit.) Therefore, they need a consistent external funding source, a money factory, if you will. These external sources can be anything from repeated equity-based investments to a budget from a parent org to an endowment, grants, contracts, or something else.

Don't all organizations need a funding source? Well, yes. For your standard vanilla business, that funding source is just the revenue from producing a good or service and then selling it. Its cash flow is directly coupled to its output. Many organizations use financial tools to pull those cash flows from the future to the present (with some discount). These tools introduce different levels of decoupling from outputs — from still tightly coupled short-term loans to loosely coupled equity investments. The latter case is so decoupled from output that equity investments are a common go-to money factory.

There are two major differences between innovation orgs and other organizations that force them to decouple funding from output: They have a significantly longer cycle time and much more uncertainty about their output. Additionally, that output isn't necessarily a product that can be sold directly — it might be a prototype, a process improvement, or simply something that is hard to monetize without hamstringing its impact.

Historically, it takes a long time for actually new ideas to become valuable things out in the world. This development time means that even if a project is going to become incredibly valuable, the organization creating it is going to be illiquid for a long time. However, the difference between innovation orgs and other businesses is not just about time scales but also about uncertainty. Large energy and infrastructure projects can take years to start making money, but the amount of money they need to get there is fairly predictable, so they can theoretically get the project done with one lump of money and don't need a consistent source of cash. In addition to uncertainty about how long it will take to pay off, new types of work differ from other long-term projects because of uncertainty about whether they even will generate a return or whether seeking that return is even a good idea if their goal isn't pure profit.

The more an organization looks like past organizations, the more accurately one can predict future performance based on a set of leading indicators. By contrast, it's not clear at all what success metrics should be when you're creating something new. Unlike other long-time-scale organizations, an innovation organization might never converge on a set of metrics! It's almost tautological that if it is consistently trying to create appreciably different new things, then there will consistently be new ways to evaluate those things.

Despite (and in part because of) their inherent uncertainty, innovation orgs need their funding to be stable in addition to consistent. The stability may be just as important as the amount. People's incentives go haywire if they have money for now but don't know that it will continue. It might seem that a solution would be to fund individual projects instead of an organization. Unfortunately, uncertain timescales mean that it's extremely hard to do one-shot funding for any given project. 32 Innovation organizations are divas: They want consistent cash flows for inconsistent results. Through this lens, it makes sense why many rational people are hesitant to fund them in the way that they need.

An innovation org's money factory needs to deal not just with uncertain time scales and illegible leading indicators but with the fact that a chunk of the money is inevitably going to be "wasted." History shows that people are shockingly bad at predicting which research projects are going to be wildly valuable (either in the cash or impact sense) and which are going to be duds. Complicating the matter is the fact that expectations of success or failure can feed back and either hurt or help the project. 33

Just to recap: Innovation organizations need stable cash flows to support long-term projects with significant Knightian uncertainty and illegible success metrics. Despite their diversity, these common characteristics make it useful to lump funding sources for innovation orgs together into the idea of a money factory.

Innovation orgs need to be aligned with their money factories

Aligned incentives between an innovation org and its money factory are the only way an innovation org can avoid crushing oversight and have the ability to work on long-term projects. Playing the same game is the only way people can align for extremely long time scales, so if the innovation org is going to survive long term, it needs to be playing the same game as the money factory. The alignment also needs to be clear to the people who control the money and be on a time scale that's acceptable to them. While deep research into the mysteries of the universe might be in the long-term interest of the United States government, the timescale that matters to most politicians is their term in office. One of the reasons Bell Labs was able to be such an outlier is that AT&T's government-sanctioned monopoly and purview over all things communication meant that they were truly aligned with a large range of research.

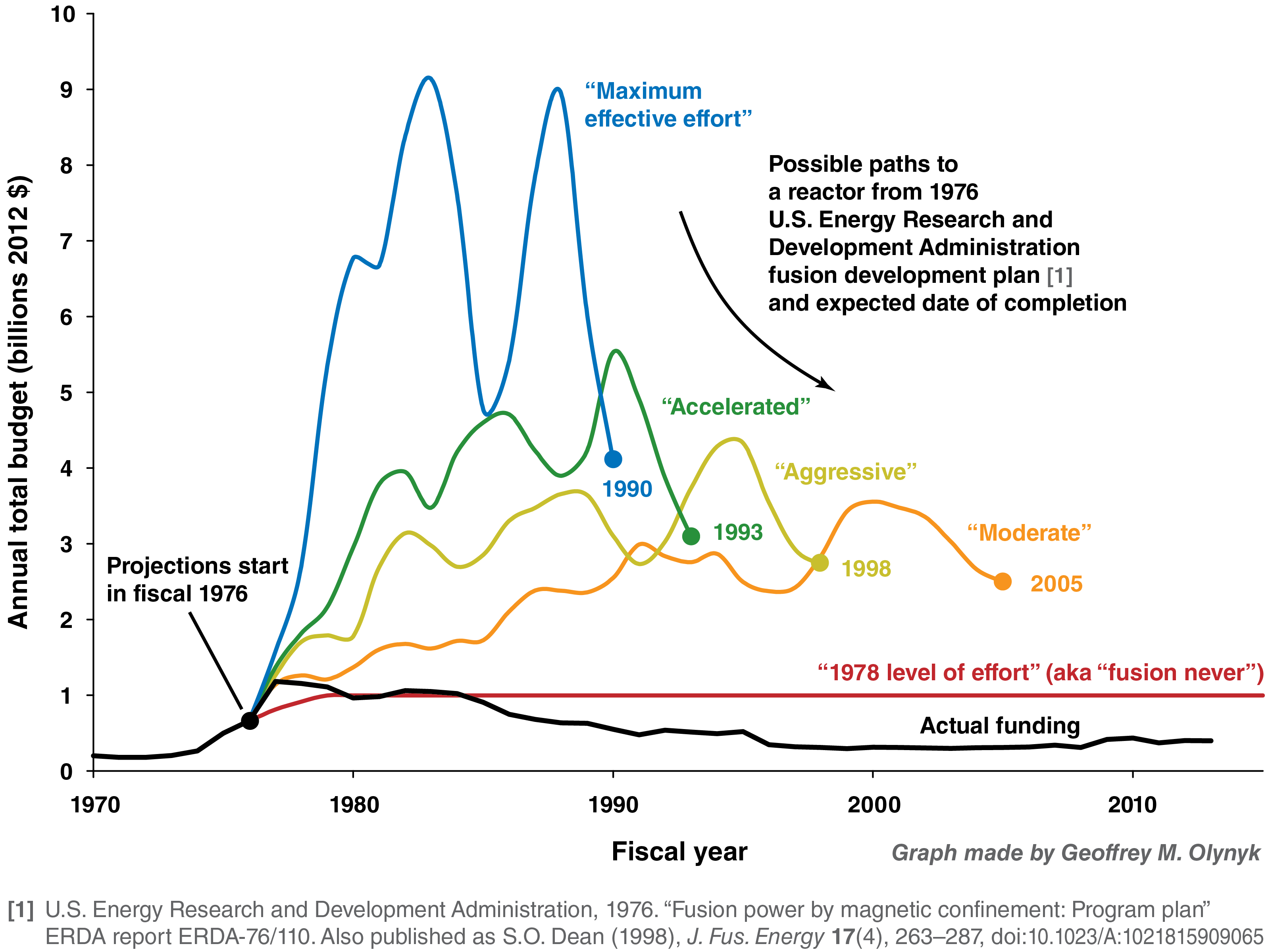

The more fund-strapped the money factory is, the tighter the alignment needs to be. When AT&T, Microsoft, or Google are flush with monopoly money, they're happy to let people piddle around on whatever they wish. When stocks dip, the first programs to get cut are the ones that have the least plausible ties to the core product. Expensive research needs to address an existential threat eventually at an organizational level to maintain support. Similarly, ARPA became DARPA in 1972 because of the increased scrutiny on military spending both in the government and outside of it.

Alignment requires existential threats

What does it actually mean for an innovation org to be aligned with its money factory? When not deciding what their chaotic neutral bard will do when confronted by the town guard, people often use "alignment" as a fuzzy suitcase word. It doesn't need to be stuffed to the point of meaninglessness though — as a useful concept it boils down to the blunt question: Is maintaining this relationship holding off some existential threat?

It's useful to think about alignment in terms of James Carse's concept of "finite games" and "infinite games" (see Finite and Infinite Games). Each person and organization is playing different games, whether the game is exploring the world or maximizing profits. An existential threat is something that potentially brings your game to a crashing halt. Bluntly, the only way for two entities playing different games to have the same goal is if one or both of the games would end if that shared goal wasn't met. That is, the goal addresses an existential threat. In the (grossly oversimplified) case of industrial labs and corporations, corporations are often playing the "maximize profit" game. The labs are often playing the "create sweet technology" game. In reality, the two can only be aligned if the labs' intermediate goals either directly help the corporation maximize profit or enable the corporation to keep playing its game (by deflecting antitrust litigation, for example).

Of course, "existential threat" is an extreme term that means different things for different entities. You could think of an existential threat as an event or series of events that could end something that you really do not want to end. This "threatened thing" can vary wildly in different contexts. For companies, it could be an important revenue stream or the business itself. For an individual, it could be your life or just the trajectory of your career. Clearly, what is existential is relative, and there's a continuum of importance in the threats.

While it's abstract, I find this concept useful because it allows you to roughly analyze how "aligned" two entities are and have blunt conversations about it.

Expensive research needs to address an existential threat eventually at an organizational level to maintain the support it needs to be effective

If alignment requires existential threats and innovation orgs need to be aligned with their money factories, it follows that research efforts need to address an existential threat to maintain support. I want to dig into what that means practically.

A brief aside: I'm going to focus on the realities of how innovation organizations continue to get money in the door. It's easy for the discussion to go down the track of "Painkillers vs vitamins! If I'd asked people what they wanted, they would have said 'a faster horse.' Make things people want! Is physics worthwhile if it never becomes a product?" And so on. These are questions of "What should a research organization do?" which are important in their own right. However, the less-discussed question is "What should a research organization do to stay alive?"

I wish this section's header could be "Research organizations 35 need to address existential threats to maintain support." The statement is both simple and bold; there are plenty of examples of research orgs that struggled with support because they didn't really address an existential threat (Dynamicland and BP Venture Research come to mind). However, it's also not true: There is a large class of research organizations that maintain support but continue to limp along ineffectively (Bell Labs still exists!). So perhaps "Effective research organizations need to address existential threats" is more accurate. This would explain the difference between ARPA-E and DARPA and why Bell Labs declined once it no longer staved off regulators, among other examples.

However! There are many examples of effective research organizations that did not address existential threats. In fact, most great scientists were not actually out to address existential threats — Galileo, Newton, Rutherford, Einstein, etc. They just managed to cobble together enough money from patrons or side hustles to keep going. Patreon-sponsored contemporaries are similar — I give some money to support a few researchers, but they aren't addressing any existential threat for me. The notable pattern is that these examples are all individuals or small groups. Aha! It suggests that perhaps there is a nebulous threshold below which effective research can work off of "throwaway" money and above which people start looking at money spent on research as "buying" something — we could call this point "expensive."

Another potential Achilles heel in this idea is the fact that the work that convinces us outsiders that a research organization is effective is often some of the least existential-problem-addressing work that the organization does: transistors at Bell Labs, interactive computing at DARPA, etc. However, at the same time that it was inventing the transistor, Bell Labs was discovering better wire sheathings that saved AT&T billions of dollars, and DARPA was working out ways to detect nuclear explosions anywhere in the world at the same time that Licklider was seeding interactive computing groups across the US. This tension is worth noting because it's critical not to lash any single project too tightly to the importance of addressing existential threats at an organizational level.

So, the correct statement is perhaps that expensive research needs to address an existential threat eventually at an organizational level to maintain support. This is quite the mashup of nebulous words, isn't it? It's important enough that it's worthwhile to dig into each piece.

What does it mean for research to be "expensive"?

The line delineating "expensive" is relative and slippery, but there's definitely a line. In large part, it's psychological. Buying something expensive causes you to pause and consider. You care much more about its outcome. While your wealth level absolutely factors into what is expensive and not expensive, it's nothing like a 1:1 relationship. Someone who agonizes when cabbage prices go from $0.69/lb to $0.89/lb will buy a $500 phone without thinking about it. This same phenomenon happens with corporations, governments, investors, and philanthropists as well.

What does "eventually" entail?

Most organizations have a grace period to piddle around. However, like the border between expensive and not-expensive, the length of this grace period is extremely fuzzy and liable to change at a moment's notice. Usually, when it's confusing why a corporation or investor would be supporting work that is so far from a product, it doesn't mean you're missing something; it means the hammer is about to fall. It's almost indescribable, but there is a distinct sense you get from a startup or new research lab that suddenly runs into the end of its grace period — it goes from feeling like Willy Wonka's chocolate factory to ACME Chocolate Improvement Inc.

"Eventually" also raises a question about the frequency of hits that an organization needs to maintain, which seems to be a function of how big those hits are and how existential the threats they address actually are. DARPA has a 5–10% program success rate, which I suspect it can get away with because military superiority is very important to the US government, while most charities need to show progress every semi-annual fundraising season.

Why do existential threats need to be addressed at an organizational level?

The organization needs to address an existential threat to its money factory 36 rather than any individual project. Effective research organizations seem to build a portfolio of projects that address existential threats at a sufficient rate to maintain the perception that they're addressing existential threats.

This portfolio approach is clutch for several reasons. There is uncertainty around any given project as to whether it will address existential threats, and often, you can't honestly answer that question a priori without doing some work or strangling the project in the cradle. The book Loonshots introduces the concepts of "warty babies" and "the three deaths," which are incisive mental models of the phenomena that lead to project-cradle-death. Additionally, the projects that directly address existential threats for funders and those that are most interesting or globally impactful are often disjoint. AT&T didn't overwhelmingly benefit from the transistor or the cell phone, nor did Xerox benefit from the GUI. In the same way that winners pay for duds in a VC portfolio, aligned work can cover for misaligned work if the alignment is considered at an organizational instead of a project level.
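To make the portfolio arithmetic concrete, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that every project succeeds independently with the same probability, which is certainly not true of real research programs. The function name and the portfolio size of 20 are hypothetical; the 5% and 10% rates are the DARPA program success range mentioned earlier.

```python
def prob_at_least_one_hit(success_rate: float, num_projects: int) -> float:
    """Chance that a portfolio produces at least one hit.

    Illustrative assumption: projects succeed independently and share
    the same success rate. Real portfolios are messier than this.
    """
    if not 0.0 <= success_rate <= 1.0:
        raise ValueError("success_rate must be between 0 and 1")
    if num_projects < 0:
        raise ValueError("num_projects must be non-negative")
    # P(at least one hit) = 1 - P(every project is a dud)
    return 1.0 - (1.0 - success_rate) ** num_projects


if __name__ == "__main__":
    # Hypothetical portfolio of 20 programs at the low and high ends
    # of a 5-10% per-program success rate.
    for rate in (0.05, 0.10):
        chance = prob_at_least_one_hit(rate, 20)
        print(f"rate={rate:.0%}, programs=20, P(at least one hit)={chance:.2f}")
```

The point of the sketch is only that a low per-project hit rate can still add up to a decent chance of an organizational-level hit once there are enough projects, which is why it matters whether funders judge the portfolio or each project.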

If the organization produces aligned hits at a sufficient rate, it ideally can create a trusted hierarchy where the trust (and money) is flowing down the power ladder. Congress trusts the DARPA director, who trusts the deputy director, who trusts the PM. The PM doesn't need to get Congress's permission to start a program, just the permission of the deputy director. 38 This is why opacity is important to DARPA's outlier success — it can work on crazy ideas that would never get a priori funding from Congress, but it needs to continually renew the trust to maintain that opacity by delivering as an organization.

Another way this manifests is through contract research organizations that fund themselves through contracts or grants but use that money to fund internal research.

Industrial labs no longer fill the niche they did in the early-to-mid 20th century

In the past, industrial labs filled a particular niche in the innovation ecosystem. There's a strong sense that, as of 2021, corporate R&D organizations 39 no longer fill that niche. However, these organizations (including Xerox PARC and Bell Labs) still exist, so we need a strong argument that they no longer fill the solutions R&D niche.

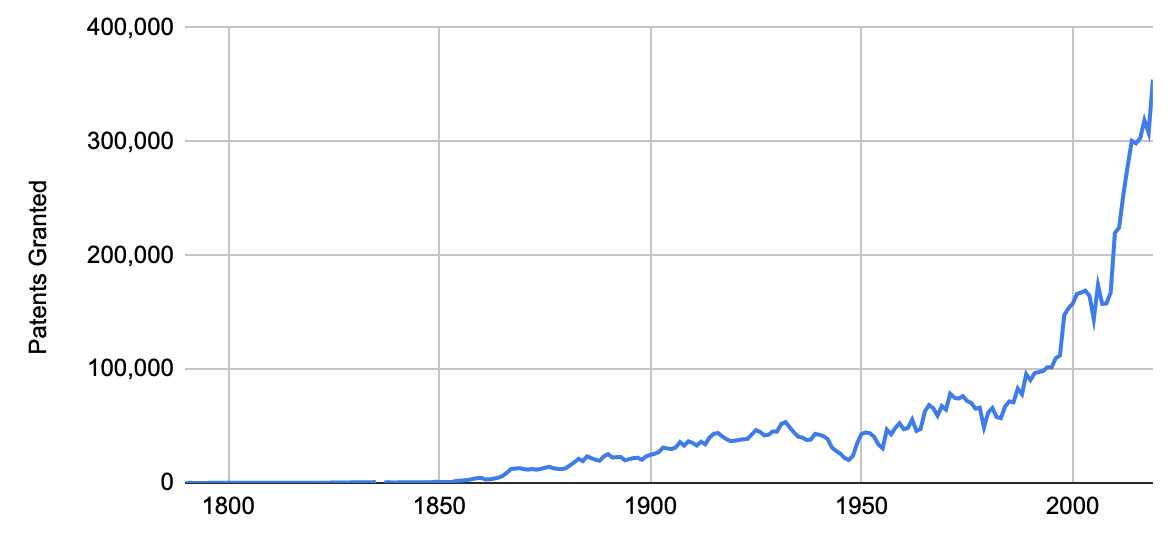

I'll proceed by looking at both outputs and inputs. Industrial labs are no longer producing the real-world outcomes they once did. However, that argument has a causality problem: it could be that labs are actually filling the same niche and a confounder is causing decreased output. This confounder could be the same thing driving stagnation in general. For example, it could be that we really have picked a finite amount of low-hanging fruit and that Robert Gordon is right that the explosion of growth between the late 19th and mid 20th century was a one-off event. I want to argue that, instead, the conditions that enabled industrial labs in particular to do great work no longer exist.

There are many exceptions! AI research in particular is a glaring exception to the decline of industrial labs, but there are many potentially amazing corporate R&D projects happening around the world. 40 One might argue that the exceptions are the rule: that there are just fewer technologies that would benefit from healthy industrial labs (remember the low-hanging fruit confounder). It's impossible to prove definitively, but I want to argue that the niche industrial labs once occupied still needs to be filled.

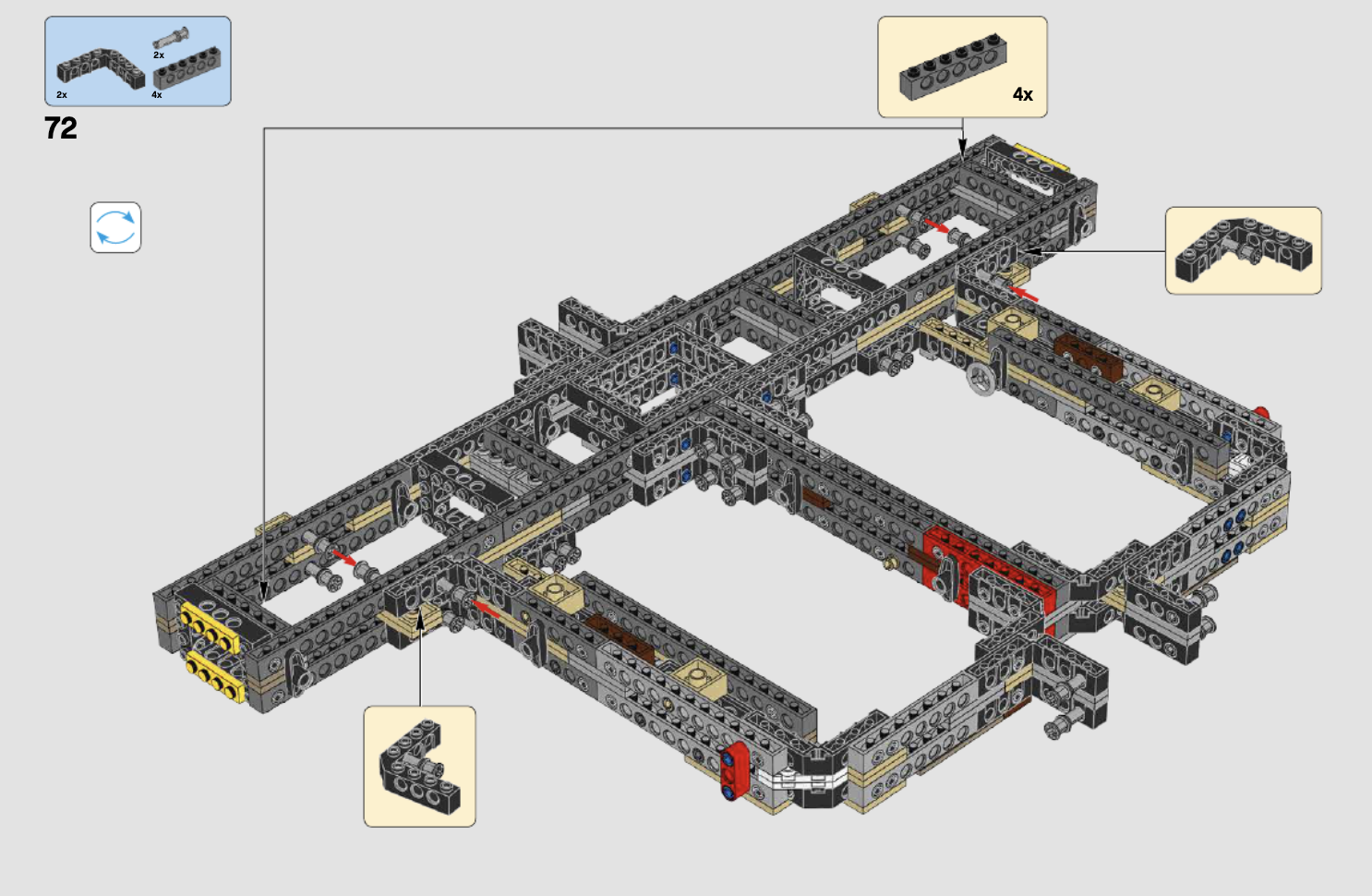

Going through the characteristics of the niche we discussed previously: